Installing services with ArgoCD¶

Overview¶

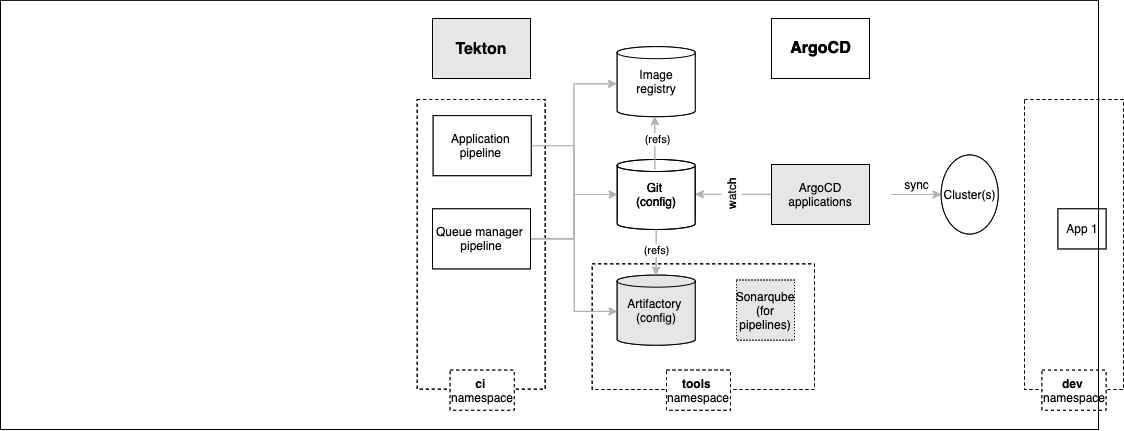

In the previous section of this chapter, we used GitOps to create the ArgoCD applications that installed and managed the ci, tools and dev namespaces in the cluster.

In this section we're going to complete the installation of all the necessary services required by our CP4S CICD process.

We'll examine these highlighted components in more detail throughout this section of the tutorial; here's an overview of their function.

- `Tekton` is used for Continuous Integration. Often, the Tekton pipeline will perform its changes under a pull-request (PR) to provide an explicit approval mechanism or through a push based on the requirements for cluster changes.

- `Artifactory` will provide a store for CP4S application and queue manager build artifacts such as Helm charts. It is used in conjunction with the GitOps repository and image registry.

- `Sonarqube` is used by the CP4S application pipeline for code quality and security scanning. It helps ensure the quality of a deployed application.

- `ArgoCD applications` will be created for each of these resources. Specifically, ArgoCD applications will keep the cluster synchronized with the application and queue manager YAML definitions stored in Artifactory and Git.

Note how these application services are installed in the ci and tools namespaces we created in the previous topic.

This section will reinforce our understanding of GitOps. We will then be ready to create CP4S applications that use the infrastructure we previously created and the services we install in this section.

In this topic, we're going to:

- Deploy services to the cluster using GitOps

- Explore the ArgoCD applications that manage these services

- Explore how more complex services work using `Artifactory` as an example

- Review how ArgoCD projects work

- See how `infra` and `services` ArgoCD applications manage the infrastructure and services layers in our architecture.

By the end of this topic we'll have a fully configured cluster which is ready for us to deploy CP4S applications and queue managers.

Pre-requisites¶

Before attempting this section, you must have completed the following tasks:

- You have created an OCP cluster instance.

- You have installed on your local machine the `oc` command that matches the version of your cluster.

- You have installed `npm`, `git` and `tree` commands.

- You have completed the tutorial section to customize the GitOps repository, and install ArgoCD.

- You have completed the tutorial section to create the `ci`, `tools` and `dev` namespaces using GitOps.

Please see the previous sections of this guide for information on how to do these tasks.
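As a quick sanity check of the command-line prerequisites listed above, something like the following sketch could be used. The function name `require_cmds` is hypothetical (it is not part of the tutorial repository); it only relies on the standard shell built-in `command -v`.

```shell
# Hypothetical helper: report any required command that is not on the PATH.
# Returns 0 when every command is found, 1 otherwise.
require_cmds() {
  status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "Required command not found: $cmd" >&2
      status=1
    fi
  done
  return "$status"
}
```

For this tutorial it could be called as `require_cmds oc npm git tree`.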

Video Walkthrough¶

This video demonstrates how to install Tekton. It also shows how to use the GitOps repository to set up different service related components.

This is a video walkthrough and it takes you step by step through the below sections.

Post cluster provisioning tasks¶

Red Hat OpenShift cluster¶

- An OpenShift v4.7+ cluster is required.

CLI tools¶

-

Install the OpenShift CLI `oc` (version 4.7+). The binary can be downloaded from the Help menu of the OpenShift Console.

Download oc cli

-

Log in from a terminal window.

oc login --token=<token> --server=<server>

IBM Entitlement Key¶

-

The `IBM Entitlement Key` is required to pull IBM Cloud Pak specific container images from the IBM Entitled Registry. To get an entitlement key:

- Log in to MyIBM Container Software Library with an IBMid and password associated with the entitled software.

- Select the View library option to verify your entitlement(s).

- Select the Get entitlement key to retrieve the key.

-

In the following command, replace `<entitlement_key>` with the value of the `IBM Entitlement Key` retrieved in the previous step.

    export IBM_ENTITLEMENT_KEY=<entitlement_key>

-

Create a Secret containing the entitlement key in the `tools` namespace.

    oc new-project tools || true
    oc create secret docker-registry ibm-entitlement-key -n tools \
      --docker-username=cp \
      --docker-password="$IBM_ENTITLEMENT_KEY" \
      --docker-server=cp.icr.io

The following output confirms that the `ibm-entitlement-key` secret has been created:

    secret/ibm-entitlement-key created

Installing Tekton for GitOps¶

Tekton is made available to your Red Hat OpenShift cluster through the Red Hat OpenShift Pipelines operator. Let's see how to get that operator installed on your cluster.

-

Ensure environment variables are set

Tip

If you're returning to the tutorial after restarting your computer, ensure that the $GIT_ORG, $GIT_BRANCH and $GIT_ROOT environment variables are set.

(Replace `<your organization name>` appropriately:)

    export GIT_BRANCH=master
    export GIT_ORG=<your organization name>
    export GIT_ROOT=$HOME/git/$GIT_ORG-root

You can verify your environment variables as follows:

    echo $GIT_BRANCH
    echo $GIT_ORG
    echo $GIT_ROOT

-

Ensure you're logged in to the cluster

Log into your OCP cluster, substituting the `--token` and `--server` parameters with your values:

    oc login --token=<token> --server=<server>

If you are unsure of these values, click your user ID in the OpenShift web console and select "Copy Login Command".
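The environment variable check from the first step can also be scripted. The following is a minimal sketch, assuming a POSIX shell; `require_vars` is a hypothetical helper name, not something provided by the tutorial repository.

```shell
# Hypothetical helper: fail if any of the named environment variables is
# unset or empty. Variable names are passed as arguments.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call would be `require_vars GIT_ORG GIT_BRANCH GIT_ROOT`. To confirm that the login succeeded, `oc whoami` prints the current user.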

-

Locate your GitOps repository

If necessary, change to the root of your GitOps repository, which is stored in the `$GIT_ROOT` environment variable.

Issue the following command to change to your GitOps repository:

    cd $GIT_ROOT
    cd multi-tenancy-gitops

-

Install Tekton into the cluster

We use the Red Hat Pipelines operator to install Tekton into the cluster. The sample repository contains the YAML necessary to do this. We’ll examine it later, but first let’s use it.

Open `0-bootstrap/single-cluster/2-services/kustomization.yaml` and uncomment the below resources:

    - argocd/operators/openshift-pipelines.yaml

Your `kustomization.yaml` for services should match the following:

    resources:
    # IBM Software
    ## Cloud Pak for Integration
    #- argocd/operators/ibm-ace-operator.yaml
    #- argocd/operators/ibm-apic-operator.yaml
    #- argocd/instances/ibm-apic-instance.yaml
    #- argocd/instances/ibm-apic-management-portal-instance.yaml
    #- argocd/instances/ibm-apic-gateway-analytics-instance.yaml
    #- argocd/operators/ibm-aspera-operator.yaml
    #- argocd/operators/ibm-assetrepository-operator.yaml
    #- argocd/operators/ibm-cp4i-operators.yaml
    #- argocd/operators/ibm-datapower-operator.yaml
    #- argocd/operators/ibm-eventstreams-operator.yaml
    #- argocd/operators/ibm-mq-operator.yaml
    #- argocd/operators/ibm-opsdashboard-operator.yaml
    #- argocd/operators/ibm-platform-navigator.yaml
    #- argocd/instances/ibm-platform-navigator-instance.yaml
    ## Cloud Pak for Business Automation
    #- argocd/operators/ibm-cp4a-operator.yaml
    #- argocd/operators/ibm-db2u-operator.yaml
    #- argocd/operators/ibm-process-mining-operator.yaml
    #- argocd/instances/ibm-process-mining-instance.yaml
    ## Cloud Pak for Data
    #- argocd/operators/ibm-cp4d-watson-studio-operator.yaml
    #- argocd/instances/ibm-cp4d-watson-studio-instance.yaml
    #- argocd/operators/ibm-cpd-platform-operator.yaml
    #- argocd/operators/ibm-cpd-scheduling-operator.yaml
    #- argocd/instances/ibm-cpd-instance.yaml
    ## Cloud Pak for Security
    #- argocd/operators/ibm-cp4s-operator.yaml
    #- argocd/instances/ibm-cp4sthreatmanagements-instance.yaml
    ## IBM Foundational Services / Common Services
    #- argocd/operators/ibm-foundations.yaml
    #- argocd/instances/ibm-foundational-services-instance.yaml
    #- argocd/operators/ibm-automation-foundation-core-operator.yaml
    #- argocd/operators/ibm-automation-foundation-operator.yaml
    ## IBM Catalogs
    #- argocd/operators/ibm-catalogs.yaml
    # Required for IBM MQ
    #- argocd/instances/openldap.yaml
    # Required for IBM ACE, IBM MQ
    #- argocd/operators/cert-manager.yaml
    #- argocd/instances/cert-manager-instance.yaml
    # Sealed Secrets
    #- argocd/instances/sealed-secrets.yaml
    # CICD
    #- argocd/operators/grafana-operator.yaml
    #- argocd/instances/grafana-instance.yaml
    #- argocd/instances/artifactory.yaml
    #- argocd/instances/chartmuseum.yaml
    #- argocd/instances/developer-dashboard.yaml
    #- argocd/instances/swaggereditor.yaml
    #- argocd/instances/sonarqube.yaml
    #- argocd/instances/pact-broker.yaml
    # In OCP 4.7+ we need to install openshift-pipelines and possibly privileged scc to the pipeline serviceaccount
    - argocd/operators/openshift-pipelines.yaml
    # Service Mesh
    #- argocd/operators/elasticsearch.yaml
    #- argocd/operators/jaeger.yaml
    #- argocd/operators/kiali.yaml
    #- argocd/operators/openshift-service-mesh.yaml
    #- argocd/instances/openshift-service-mesh-instance.yaml
    # Monitoring
    #- argocd/instances/instana-agent.yaml
    #- argocd/instances/instana-robot-shop.yaml
    # Spectrum Protect Plus
    #- argocd/operators/spp-catalog.yaml
    #- argocd/operators/spp-operator.yaml
    #- argocd/instances/spp-instance.yaml
    #- argocd/operators/oadp-operator.yaml
    #- argocd/instances/oadp-instance.yaml
    #- argocd/instances/baas-instance.yaml
    patches:
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=applications,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=helm"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://charts.cloudnativetoolkit.dev
    - target:
        name: ibm-automation-foundation-operator
      patch: |-
        - op: add
          path: /spec/source/helm/parameters/-
          value:
            name: spec.channel
            value: v1.1

Commit and push changes to your git repository:

    git add .
    git commit -s -m "Install tekton using Red Hat OpenShift Pipelines Operator"
    git push origin $GIT_BRANCH

The changes have now been pushed to your GitOps repository:

    Enumerating objects: 11, done.
    Counting objects: 100% (11/11), done.
    Delta compression using up to 8 threads
    Compressing objects: 100% (6/6), done.
    Writing objects: 100% (6/6), 524 bytes | 524.00 KiB/s, done.
    Total 6 (delta 5), reused 0 (delta 0)
    remote: Resolving deltas: 100% (5/5), completed with 5 local objects.
    To https://github.com/prod-ref-guide/multi-tenancy-gitops.git
       85a4c46..61e15b0  master -> master

-

Activate the services in the GitOps repo

Access the `0-bootstrap/single-cluster/kustomization.yaml`:

    cat 0-bootstrap/single-cluster/kustomization.yaml

Let us only deploy `services` resources to the cluster. Open `0-bootstrap/single-cluster/kustomization.yaml` and uncomment `2-services/2-services.yaml` as follows:

    resources:
    - 1-infra/1-infra.yaml
    - 2-services/2-services.yaml
    # - 3-apps/3-apps.yaml
    patches:
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=gitops"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=infra"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=services"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=applications"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git

Once we push this change to GitHub, it will be seen by the `bootstrap-single-cluster` application in ArgoCD, and the resources it refers to will be applied to the cluster.

-

Push GitOps changes to GitHub

Let’s make these GitOps changes visible to the ArgoCD `bootstrap-single-cluster` application via GitHub.

Add all changes in the current folder to a git index, commit them, and push them to GitHub:

    git add .
    git commit -s -m "Deploying services"
    git push origin $GIT_BRANCH

The changes have now been pushed to your GitOps repository:

    Enumerating objects: 9, done.
    Counting objects: 100% (9/9), done.
    Delta compression using up to 8 threads
    Compressing objects: 100% (5/5), done.
    Writing objects: 100% (5/5), 431 bytes | 431.00 KiB/s, done.
    Total 5 (delta 4), reused 0 (delta 0)
    remote: Resolving deltas: 100% (4/4), completed with 4 local objects.
    To https://github.com/prod-ref-guide/multi-tenancy-gitops.git
       533602c..85a4c46  master -> master

This change to the GitOps repository can now be used by ArgoCD.
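The tutorial makes the `kustomization.yaml` edits by hand in an editor. If you prefer to script them, a sketch along the following lines may work. `uncomment_resource` is a hypothetical helper, and it assumes the commented entries look like `#- <path>` or `# - <path>` on a line of their own, as in the listings above.

```shell
# Hypothetical helper: uncomment a single "- <resource>" entry in a
# kustomization.yaml file. The file is rewritten via a temporary copy so
# that the same code works with both GNU and BSD sed.
uncomment_resource() {
  file=$1
  resource=$2
  tmp=$(mktemp)
  # Match optional indentation, '#', optional spaces, then "- <resource>".
  # Dots in the resource name are treated as regex wildcards, which is
  # slightly loose but harmless for these file paths.
  sed "s|^\([[:space:]]*\)#[[:space:]]*- ${resource}\$|\1- ${resource}|" \
    "$file" > "$tmp" && mv "$tmp" "$file"
}
```

For example, `uncomment_resource 0-bootstrap/single-cluster/2-services/kustomization.yaml argocd/operators/openshift-pipelines.yaml` would correspond to the Tekton edit, and `uncomment_resource 0-bootstrap/single-cluster/kustomization.yaml 2-services/2-services.yaml` to activating the services layer. Review the result with `git diff` before committing.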

-

The `bootstrap-single-cluster` application detects the change and resyncs

Once these changes to our GitOps repository are seen by ArgoCD, it will resync the cluster to the desired new state.

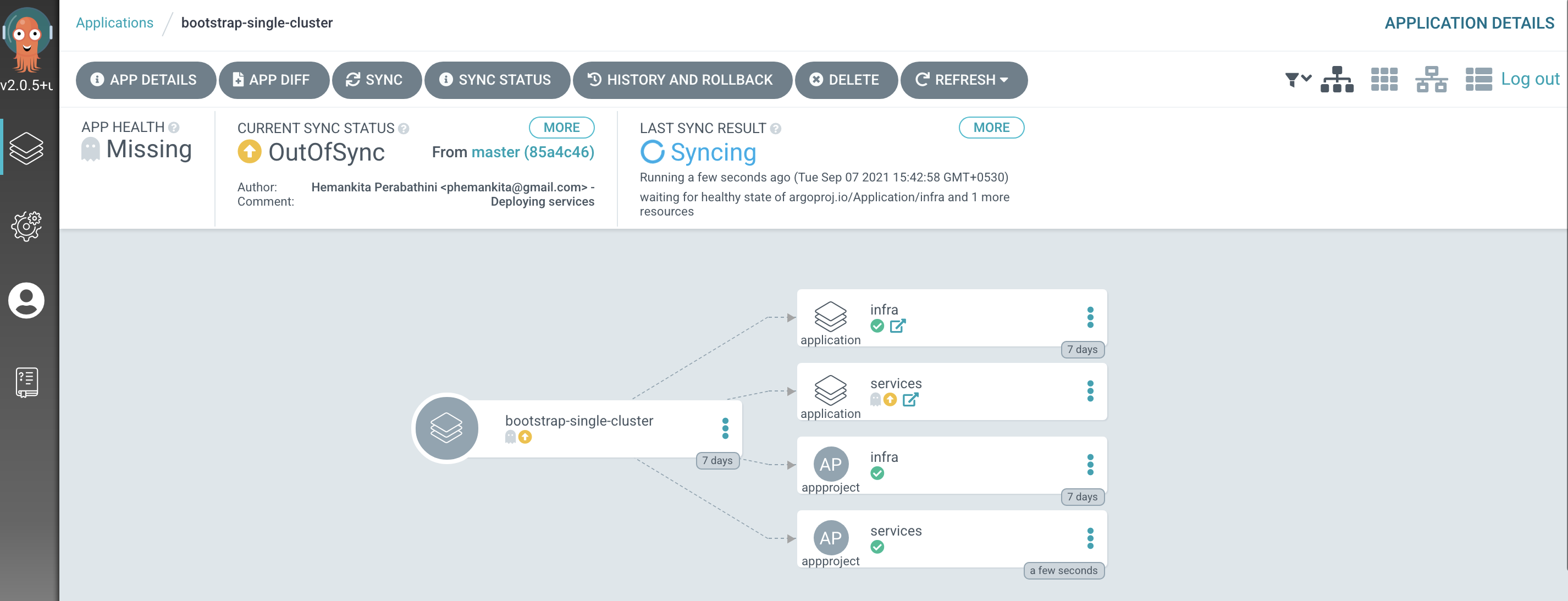

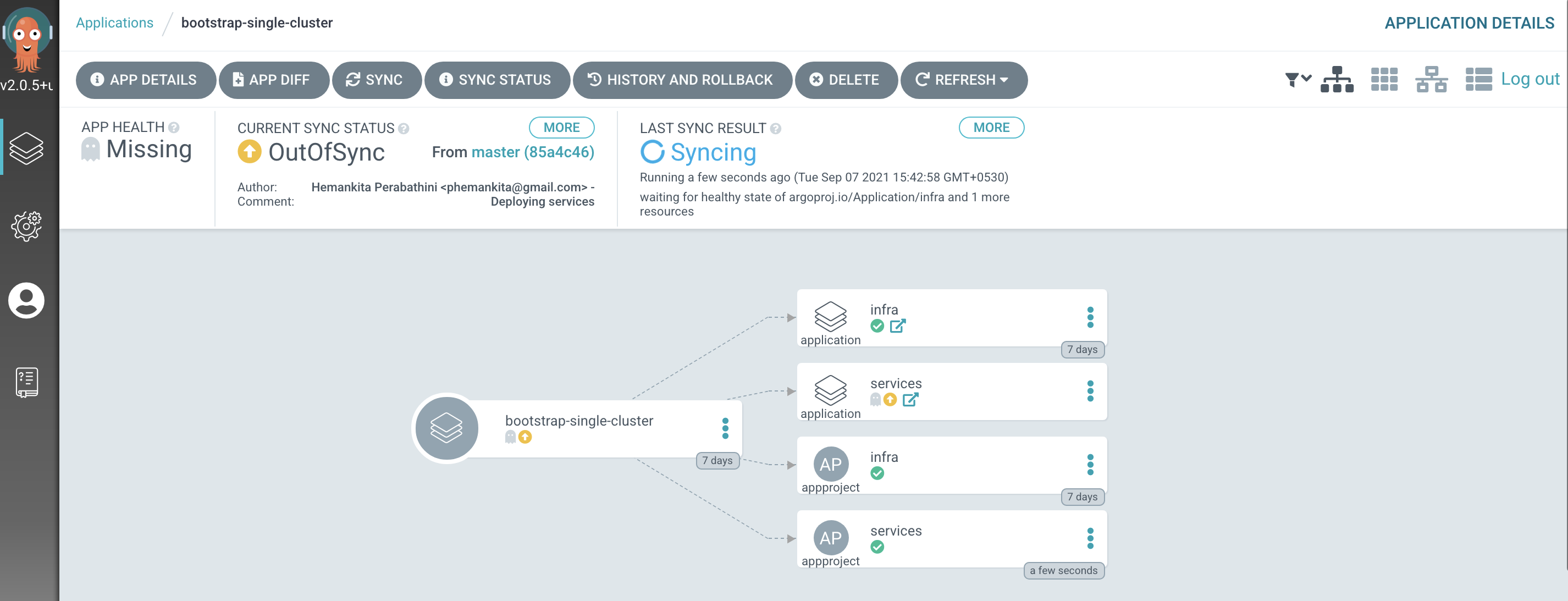

Switch to the ArgoCD UI Applications view to see the start of this resync process:

Notice how the `bootstrap-single-cluster` application has detected the changes and is automatically syncing the cluster.

(You can manually `sync` the `bootstrap-single-cluster` ArgoCD application in the UI if you don't want to wait for ArgoCD to detect the change.)

-

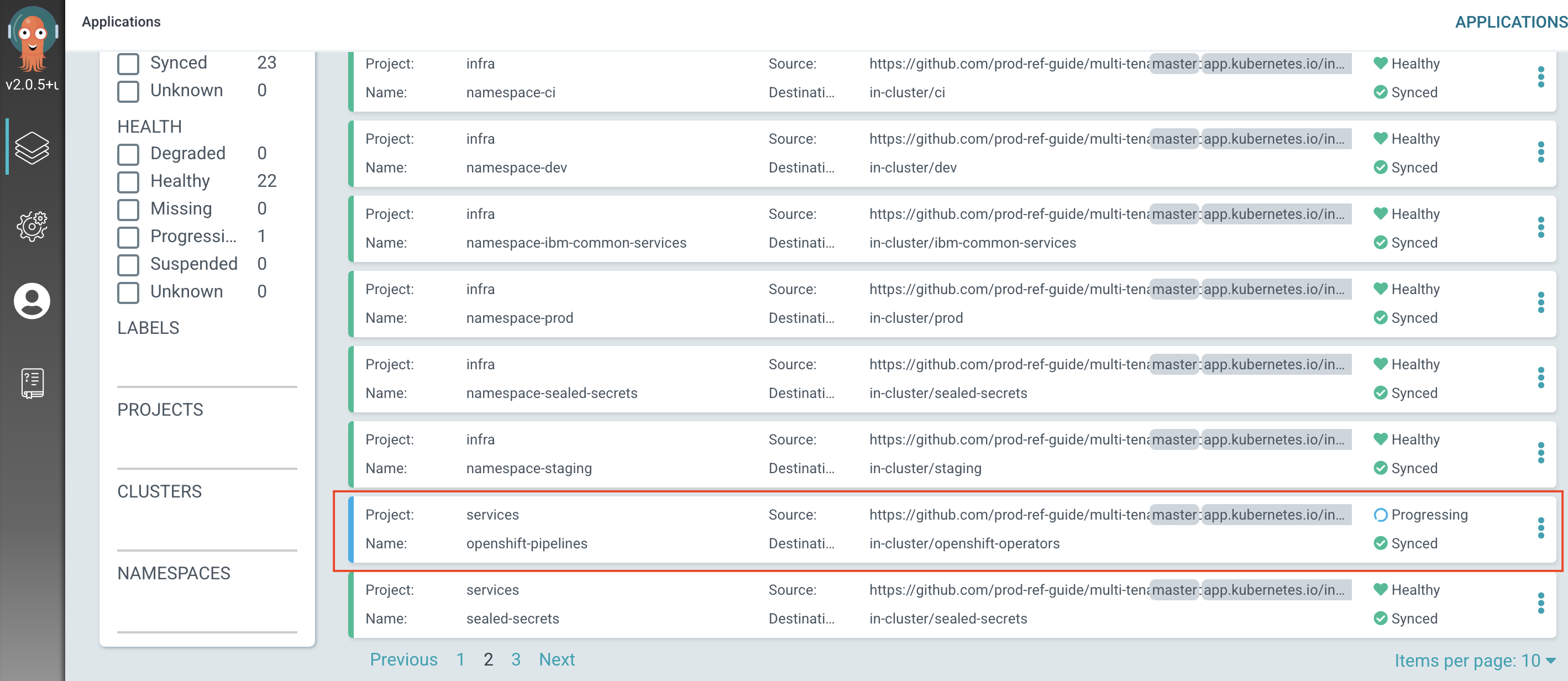

The new ArgoCD applications

After a short while, you'll see that the `openshift-pipelines` ArgoCD application has been created as follows:

-

Wait for the Tekton installation to complete

Installation is an asynchronous process, so we can issue a command that will complete when installation is done.

Wait 30 seconds for the installation to get started, then issue the following command:

    sleep 30; oc wait --for condition=available --timeout 60s deployment.apps/openshift-pipelines-operator -n openshift-operators

After a while, you should see the following message informing us that operator installation is complete:

    deployment.apps/openshift-pipelines-operator condition met

Tekton is now installed and ready to use. We’ll explore Tekton in later topics.

If you see something like:

    Error from server (NotFound): deployments.apps "openshift-pipelines-operator" not found

then re-issue the command; the error occurred because the subscription took a little longer to create than expected.
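Rather than re-issuing the command by hand, the wait can be wrapped in a small retry loop. This is only a sketch: `retry` is a hypothetical helper, and the attempt count and delay used in the example call are arbitrary assumptions.

```shell
# Hypothetical helper: run a command until it succeeds, up to a maximum
# number of attempts, sleeping between attempts.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while :; do
    # Stop as soon as the wrapped command succeeds.
    "$@" && return 0
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}
```

With this in place, the wait step could be written as `retry 5 10 oc wait --for=condition=available --timeout=60s deployment.apps/openshift-pipelines-operator -n openshift-operators`, which re-runs `oc wait` if the deployment does not exist yet.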

Deploy services to the cluster¶

We've just had our first successful GitOps experience, using an ArgoCD application to create the ci, tools and dev namespaces in our cluster at the infrastructure layer level as well as using ArgoCD to deploy the Red Hat OpenShift Pipelines operator at the services layer level. There are a few more components you need to create for this IBM CP4S tutorial.

Edit the CP4SThreatManagements custom resource instance in `${GITOPS_PROFILE}/2-services/argocd/instances/ibm-cp4sthreatmanagements-instance.yaml` and specify a block or file storage class. The default is set to `managed-nfs-storage`.

    - name: spec.basicDeploymentConfiguration.storageClass
      value: managed-nfs-storage
    - name: spec.extendedDeploymentConfiguration.backupStorageClass
      value: managed-nfs-storage
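If you would rather script this change than edit the file by hand, the following sketch shows one possible approach. `set_helm_param` is a hypothetical helper, and it assumes each parameter is written as a `name:` line followed by its `value:` line, as in the fragment above.

```shell
# Hypothetical helper: set the value that follows a given "name:" entry.
# Usage: set_helm_param <file> <parameter-name> <new-value>
set_helm_param() {
  file=$1
  param=$2
  new_value=$3
  tmp=$(mktemp)
  awk -v param="$param" -v val="$new_value" '
    # Rewrite the first "value:" line seen after the matching name line.
    found && /^[ \t]*value:/ { sub(/value:.*/, "value: " val); found = 0 }
    # Remember that the requested parameter name has just been seen.
    $0 ~ "name:[ \t]*" param "$" { found = 1 }
    { print }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}
```

For example, `set_helm_param "$FILE" spec.basicDeploymentConfiguration.storageClass my-block-storage`, where `$FILE` points at the instance YAML named above and `my-block-storage` is a placeholder for a storage class that exists in your cluster (`oc get storageclass` lists the available ones).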

These components are part of the services layer in our architecture, and that requires us to access /0-bootstrap/single-cluster/2-services within our GitOps repository.

-

Check services layer components

As we saw earlier, the `bootstrap-single-cluster` application uses the contents of the `/0-bootstrap/single-cluster/2-services` folder to determine which Kubernetes resources are available to be deployed in the cluster.

Issue the following command to see what could be deployed in the cluster:

    tree 0-bootstrap/single-cluster/2-services/ -L 3

You should see a set of operators and instances of these available in the GitOps framework at the services layer:

    0-bootstrap/single-cluster/2-services/
    ├── 2-services.yaml
    ├── argocd
    │   ├── instances
    │   │   ├── artifactory.yaml
    │   │   ├── baas-instance.yaml
    │   │   ├── cert-manager-instance.yaml
    │   │   ├── chartmuseum.yaml
    │   │   ├── developer-dashboard.yaml
    │   │   ├── grafana-instance.yaml
    │   │   ├── ibm-apic-gateway-analytics-instance.yaml
    │   │   ├── ibm-apic-instance.yaml
    │   │   ├── ibm-apic-management-portal-instance.yaml
    │   │   ├── ibm-cp4d-watson-studio-instance.yaml
    │   │   ├── ibm-cp4sthreatmanagements-instance.yaml
    │   │   ├── ibm-cpd-instance.yaml
    │   │   ├── ibm-foundational-services-instance.yaml
    │   │   ├── ibm-platform-navigator-instance.yaml
    │   │   ├── ibm-process-mining-instance.yaml
    │   │   ├── instana-agent.yaml
    │   │   ├── instana-robot-shop.yaml
    │   │   ├── oadp-instance.yaml
    │   │   ├── openldap.yaml
    │   │   ├── openshift-service-mesh-instance.yaml
    │   │   ├── pact-broker.yaml
    │   │   ├── sealed-secrets.yaml
    │   │   ├── sonarqube.yaml
    │   │   ├── spp-instance.yaml
    │   │   └── swaggereditor.yaml
    │   └── operators
    │       ├── cert-manager.yaml
    │       ├── elasticsearch.yaml
    │       ├── grafana-operator.yaml
    │       ├── ibm-ace-operator.yaml
    │       ├── ibm-apic-operator.yaml
    │       ├── ibm-aspera-operator.yaml
    │       ├── ibm-assetrepository-operator.yaml
    │       ├── ibm-automation-foundation-core-operator.yaml
    │       ├── ibm-automation-foundation-operator.yaml
    │       ├── ibm-catalogs.yaml
    │       ├── ibm-cp4a-operator.yaml
    │       ├── ibm-cp4d-watson-studio-operator.yaml
    │       ├── ibm-cp4i-operators.yaml
    │       ├── ibm-cp4s-operator.yaml
    │       ├── ibm-cpd-platform-operator.yaml
    │       ├── ibm-cpd-scheduling-operator.yaml
    │       ├── ibm-datapower-operator.yaml
    │       ├── ibm-db2u-operator.yaml
    │       ├── ibm-eventstreams-operator.yaml
    │       ├── ibm-foundations.yaml
    │       ├── ibm-mq-operator.yaml
    │       ├── ibm-opsdashboard-operator.yaml
    │       ├── ibm-platform-navigator.yaml
    │       ├── ibm-process-mining-operator.yaml
    │       ├── jaeger.yaml
    │       ├── kiali.yaml
    │       ├── oadp-operator.yaml
    │       ├── openshift-gitops.yaml
    │       ├── openshift-pipelines.yaml
    │       ├── openshift-service-mesh.yaml
    │       ├── spp-catalog.yaml
    │       └── spp-operator.yaml
    └── kustomization.yaml

-

Review ArgoCD services folder

Let’s examine the `0-bootstrap/single-cluster/2-services/kustomization.yaml` to see how ArgoCD manages the resources deployed to the cluster.

Issue the following command:

    cat 0-bootstrap/single-cluster/2-services/kustomization.yaml

We can see the contents of the `kustomization.yaml`:

    resources:
    # IBM Software
    ## Cloud Pak for Integration
    #- argocd/operators/ibm-ace-operator.yaml
    #- argocd/operators/ibm-apic-operator.yaml
    #- argocd/instances/ibm-apic-instance.yaml
    #- argocd/instances/ibm-apic-management-portal-instance.yaml
    #- argocd/instances/ibm-apic-gateway-analytics-instance.yaml
    #- argocd/operators/ibm-aspera-operator.yaml
    #- argocd/operators/ibm-assetrepository-operator.yaml
    #- argocd/operators/ibm-cp4i-operators.yaml
    #- argocd/operators/ibm-datapower-operator.yaml
    #- argocd/operators/ibm-eventstreams-operator.yaml
    #- argocd/operators/ibm-mq-operator.yaml
    #- argocd/operators/ibm-opsdashboard-operator.yaml
    #- argocd/operators/ibm-platform-navigator.yaml
    #- argocd/instances/ibm-platform-navigator-instance.yaml
    ## Cloud Pak for Business Automation
    #- argocd/operators/ibm-cp4a-operator.yaml
    #- argocd/operators/ibm-db2u-operator.yaml
    #- argocd/operators/ibm-process-mining-operator.yaml
    #- argocd/instances/ibm-process-mining-instance.yaml
    ## Cloud Pak for Data
    #- argocd/operators/ibm-cp4d-watson-studio-operator.yaml
    #- argocd/instances/ibm-cp4d-watson-studio-instance.yaml
    #- argocd/operators/ibm-cpd-platform-operator.yaml
    #- argocd/operators/ibm-cpd-scheduling-operator.yaml
    #- argocd/instances/ibm-cpd-instance.yaml
    ## Cloud Pak for Security
    #- argocd/operators/ibm-cp4s-operator.yaml
    #- argocd/instances/ibm-cp4sthreatmanagements-instance.yaml
    ## IBM Foundational Services / Common Services
    #- argocd/operators/ibm-foundations.yaml
    #- argocd/instances/ibm-foundational-services-instance.yaml
    #- argocd/operators/ibm-automation-foundation-core-operator.yaml
    #- argocd/operators/ibm-automation-foundation-operator.yaml
    ## IBM Catalogs
    #- argocd/operators/ibm-catalogs.yaml
    # Required for IBM MQ
    #- argocd/instances/openldap.yaml
    # Required for IBM ACE, IBM MQ
    #- argocd/operators/cert-manager.yaml
    #- argocd/instances/cert-manager-instance.yaml
    # Sealed Secrets
    #- argocd/instances/sealed-secrets.yaml
    # CICD
    #- argocd/operators/grafana-operator.yaml
    #- argocd/instances/grafana-instance.yaml
    #- argocd/instances/artifactory.yaml
    #- argocd/instances/chartmuseum.yaml
    #- argocd/instances/developer-dashboard.yaml
    #- argocd/instances/swaggereditor.yaml
    #- argocd/instances/sonarqube.yaml
    #- argocd/instances/pact-broker.yaml
    # In OCP 4.7+ we need to install openshift-pipelines and possibly privileged scc to the pipeline serviceaccount
    - argocd/operators/openshift-pipelines.yaml
    # Service Mesh
    #- argocd/operators/elasticsearch.yaml
    #- argocd/operators/jaeger.yaml
    #- argocd/operators/kiali.yaml
    #- argocd/operators/openshift-service-mesh.yaml
    #- argocd/instances/openshift-service-mesh-instance.yaml
    # Monitoring
    #- argocd/instances/instana-agent.yaml
    #- argocd/instances/instana-robot-shop.yaml
    # Spectrum Protect Plus
    #- argocd/operators/spp-catalog.yaml
    #- argocd/operators/spp-operator.yaml
    #- argocd/instances/spp-instance.yaml
    #- argocd/operators/oadp-operator.yaml
    #- argocd/instances/oadp-instance.yaml
    #- argocd/instances/baas-instance.yaml
    patches:
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=applications,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=helm"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://charts.cloudnativetoolkit.dev
    - target:
        name: ibm-automation-foundation-operator
      patch: |-
        - op: add
          path: /spec/source/helm/parameters/-
          value:
            name: spec.channel
            value: v1.1

-

Add the services to the cluster

Note

The `IBM Platform Navigator` instance requires a RWX storageclass and it is set to `managed-nfs-storage` by default in the ArgoCD Application `0-bootstrap/single-cluster/2-services/argocd/instances/ibm-platform-navigator-instance.yaml`. This storageclass is available for Red Hat OpenShift on IBM Cloud cluster provisioned from IBM Technology Zone with NFS storage selected.

Open `0-bootstrap/single-cluster/2-services/kustomization.yaml` and uncomment the below resources:

    ## Cloud Pak for Security
    - argocd/operators/ibm-cp4s-operator.yaml
    - argocd/instances/ibm-cp4sthreatmanagements-instance.yaml
    ## IBM Foundational Services / Common Services
    - argocd/operators/ibm-foundations.yaml
    - argocd/instances/ibm-foundational-services-instance.yaml
    - argocd/operators/ibm-automation-foundation-core-operator.yaml
    - argocd/operators/ibm-automation-foundation-operator.yaml
    ## IBM Catalogs
    - argocd/operators/ibm-catalogs.yaml
    # Sealed Secrets
    #- argocd/instances/sealed-secrets.yaml
    # CICD
    #- argocd/instances/artifactory.yaml
    #- argocd/instances/sonarqube.yaml

You will have the following resources deployed for services:

    resources:
    # IBM Software
    ## Cloud Pak for Integration
    #- argocd/operators/ibm-ace-operator.yaml
    #- argocd/operators/ibm-apic-operator.yaml
    #- argocd/instances/ibm-apic-instance.yaml
    #- argocd/instances/ibm-apic-management-portal-instance.yaml
    #- argocd/instances/ibm-apic-gateway-analytics-instance.yaml
    #- argocd/operators/ibm-aspera-operator.yaml
    #- argocd/operators/ibm-assetrepository-operator.yaml
    #- argocd/operators/ibm-cp4i-operators.yaml
    #- argocd/operators/ibm-datapower-operator.yaml
    #- argocd/operators/ibm-eventstreams-operator.yaml
    #- argocd/operators/ibm-mq-operator.yaml
    #- argocd/operators/ibm-opsdashboard-operator.yaml
    #- argocd/operators/ibm-platform-navigator.yaml
    #- argocd/instances/ibm-platform-navigator-instance.yaml
    ## Cloud Pak for Business Automation
    #- argocd/operators/ibm-cp4a-operator.yaml
    #- argocd/operators/ibm-db2u-operator.yaml
    #- argocd/operators/ibm-process-mining-operator.yaml
    #- argocd/instances/ibm-process-mining-instance.yaml
    ## Cloud Pak for Data
    #- argocd/operators/ibm-cp4d-watson-studio-operator.yaml
    #- argocd/instances/ibm-cp4d-watson-studio-instance.yaml
    #- argocd/operators/ibm-cpd-platform-operator.yaml
    #- argocd/operators/ibm-cpd-scheduling-operator.yaml
    #- argocd/instances/ibm-cpd-instance.yaml
    ## Cloud Pak for Security
    - argocd/operators/ibm-cp4s-operator.yaml
    - argocd/instances/ibm-cp4sthreatmanagements-instance.yaml
    ## IBM Foundational Services / Common Services
    - argocd/operators/ibm-foundations.yaml
    - argocd/instances/ibm-foundational-services-instance.yaml
    - argocd/operators/ibm-automation-foundation-core-operator.yaml
    - argocd/operators/ibm-automation-foundation-operator.yaml
    ## IBM Catalogs
    - argocd/operators/ibm-catalogs.yaml
    # Required for IBM MQ
    #- argocd/instances/openldap.yaml
    # Required for IBM ACE, IBM MQ
    #- argocd/operators/cert-manager.yaml
    #- argocd/instances/cert-manager-instance.yaml
    # Sealed Secrets
    #- argocd/instances/sealed-secrets.yaml
    # CICD
    #- argocd/operators/grafana-operator.yaml
    #- argocd/instances/grafana-instance.yaml
    #- argocd/instances/artifactory.yaml
    #- argocd/instances/chartmuseum.yaml
    #- argocd/instances/developer-dashboard.yaml
    #- argocd/instances/swaggereditor.yaml
    #- argocd/instances/sonarqube.yaml
    #- argocd/instances/pact-broker.yaml
    # In OCP 4.7+ we need to install openshift-pipelines and possibly privileged scc to the pipeline serviceaccount
    - argocd/operators/openshift-pipelines.yaml
    # Service Mesh
    #- argocd/operators/elasticsearch.yaml
    #- argocd/operators/jaeger.yaml
    #- argocd/operators/kiali.yaml
    #- argocd/operators/openshift-service-mesh.yaml
    #- argocd/instances/openshift-service-mesh-instance.yaml
    # Monitoring
    #- argocd/instances/instana-agent.yaml
    #- argocd/instances/instana-robot-shop.yaml
    # Spectrum Protect Plus
    #- argocd/operators/spp-catalog.yaml
    #- argocd/operators/spp-operator.yaml
    #- argocd/instances/spp-instance.yaml
    #- argocd/operators/oadp-operator.yaml
    #- argocd/instances/oadp-instance.yaml
    #- argocd/instances/baas-instance.yaml
    patches:
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=applications,gitops.tier.source=git"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=services,gitops.tier.source=helm"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://charts.cloudnativetoolkit.dev
    - target:
        name: ibm-automation-foundation-operator
      patch: |-
        - op: add
          path: /spec/source/helm/parameters/-
          value:
            name: spec.channel
            value: v1.1

Commit and push changes to your git repository:

    git add .
    git commit -s -m "Initial bootstrap setup for services"
    git push origin $GIT_BRANCH

The changes have now been pushed to your GitOps repository:

    Enumerating objects: 11, done.
    Counting objects: 100% (11/11), done.
    Delta compression using up to 8 threads
    Compressing objects: 100% (6/6), done.
    Writing objects: 100% (6/6), 564 bytes | 564.00 KiB/s, done.
    Total 6 (delta 5), reused 0 (delta 0)
    remote: Resolving deltas: 100% (5/5), completed with 5 local objects.
    To https://github.com/prod-ref-guide/multi-tenancy-gitops.git
       b49dff5..533602c  master -> master

The intention of this operation is to indicate that we'd like the resources declared in `0-bootstrap/single-cluster/2-services/kustomization.yaml` to be deployed in the cluster. Like the `infra` ArgoCD application, the `services` ArgoCD application creates resources that manage the relevant Kubernetes service resources applied to the cluster.

-

Optional: Activate the services in the GitOps repo

Run this step only if you skipped installing Tekton in the previous section. If you did not skip that step, go to `Step 7`.

Access the `0-bootstrap/single-cluster/kustomization.yaml`:

    cat 0-bootstrap/single-cluster/kustomization.yaml

Let us deploy `services` resources to the cluster. Open `0-bootstrap/single-cluster/kustomization.yaml` and uncomment `2-services/2-services.yaml` as follows:

    resources:
    - 1-infra/1-infra.yaml
    - 2-services/2-services.yaml
    # - 3-apps/3-apps.yaml
    patches:
    - target:
        group: argoproj.io
        kind: Application
        labelSelector: "gitops.tier.layer=gitops"
      patch: |-
        - op: add
          path: /spec/source/repoURL
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/source/targetRevision
          value: master
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=infra"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=services"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
    - target:
        group: argoproj.io
        kind: AppProject
        labelSelector: "gitops.tier.layer=applications"
      patch: |-
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
        - op: add
          path: /spec/sourceRepos/-
          value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git

Once we push this change to GitHub, it will be seen by the `bootstrap-single-cluster` application in ArgoCD, and the resources it refers to will be applied to the cluster.

-

Push GitOps changes to GitHub

Let’s make these GitOps changes visible to the ArgoCD `bootstrap-single-cluster` application via GitHub.

Add all changes in the current folder to a git index, commit them, and push them to GitHub:

    git add .
    git commit -s -m "Deploying services"
    git push origin $GIT_BRANCH

The changes have now been pushed to your GitOps repository:

    Enumerating objects: 9, done.
    Counting objects: 100% (9/9), done.
    Delta compression using up to 8 threads
    Compressing objects: 100% (5/5), done.
    Writing objects: 100% (5/5), 431 bytes | 431.00 KiB/s, done.
    Total 5 (delta 4), reused 0 (delta 0)
    remote: Resolving deltas: 100% (4/4), completed with 4 local objects.
    To https://github.com/prod-ref-guide/multi-tenancy-gitops.git
       533602c..85a4c46  master -> master

This change to the GitOps repository can now be used by ArgoCD.

-

The `bootstrap-single-cluster` application detects the change and resyncs

Once these changes to our GitOps repository are seen by ArgoCD, it will resync the cluster to the desired new state.

Switch to the ArgoCD UI Applications view to see the start of this resync process:

Notice how the `bootstrap-single-cluster` application has detected the changes and is automatically syncing the cluster.

(You can manually `sync` the `bootstrap-single-cluster` ArgoCD application in the UI if you don't want to wait for ArgoCD to detect the change.)

-

The new ArgoCD applications

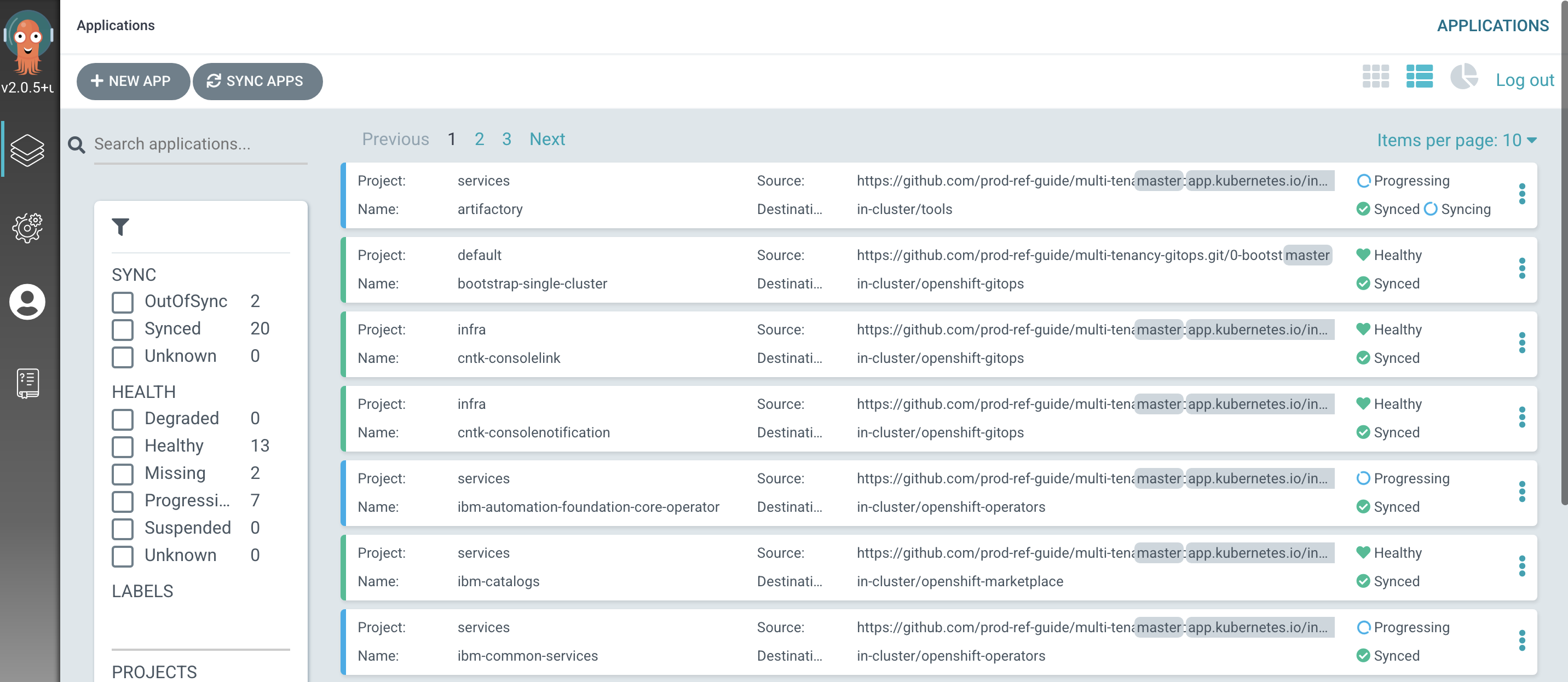

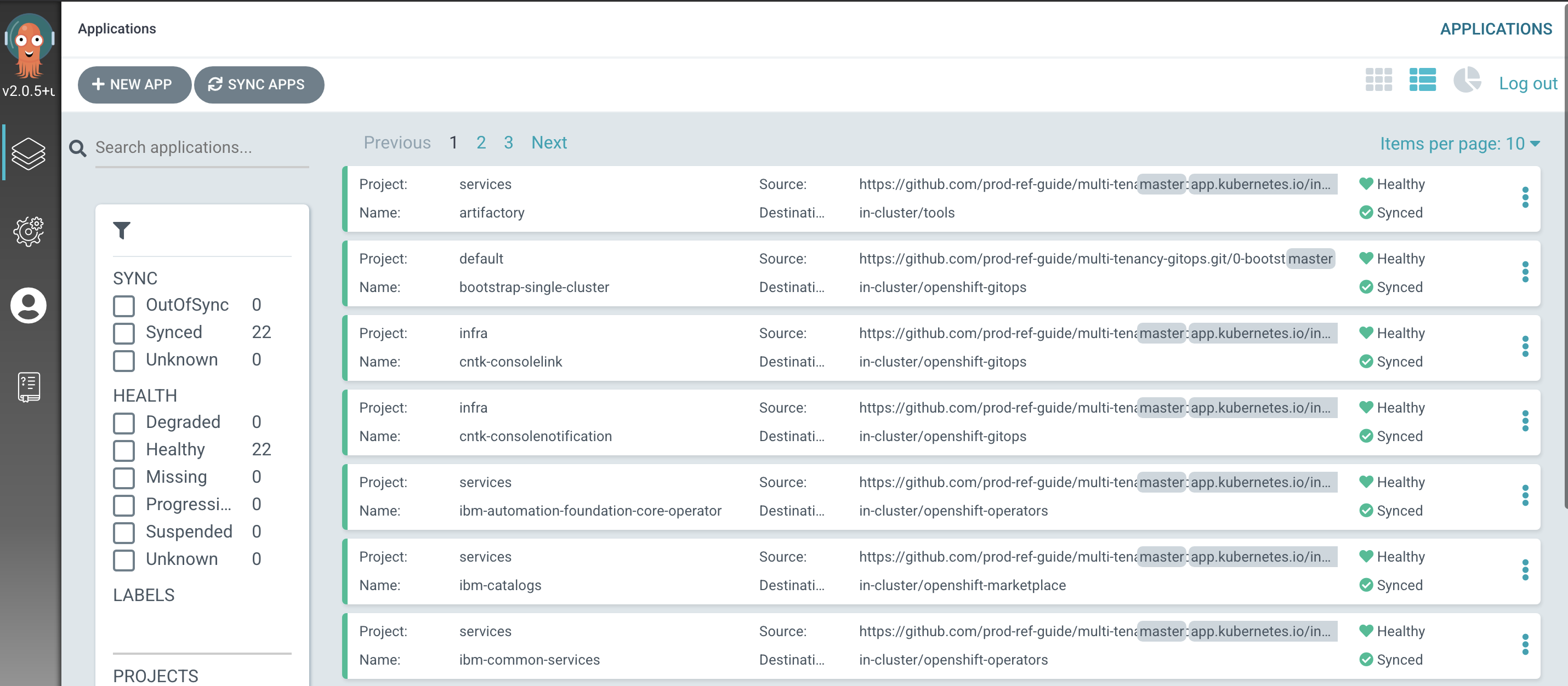

After a short while, you'll see lots of new ArgoCD applications have been created to manage the services we have deployed by modifying `kustomization.yaml` under the `0-bootstrap/single-cluster/2-services` folder:

See how most ArgoCD applications are `Synced` almost immediately, but some spend time in `Progressing`. That's because the `Artifactory` and `Sonarqube` ArgoCD applications are more complex: they create more Kubernetes resources than other ArgoCD applications and therefore take longer to `sync`.

After a few minutes you'll see that all ArgoCD applications become `Healthy` and `Synced`:

Notice how many more `Synced` ArgoCD applications are now in the cluster; these are a result of the newly added services layer in our architecture.

-

The

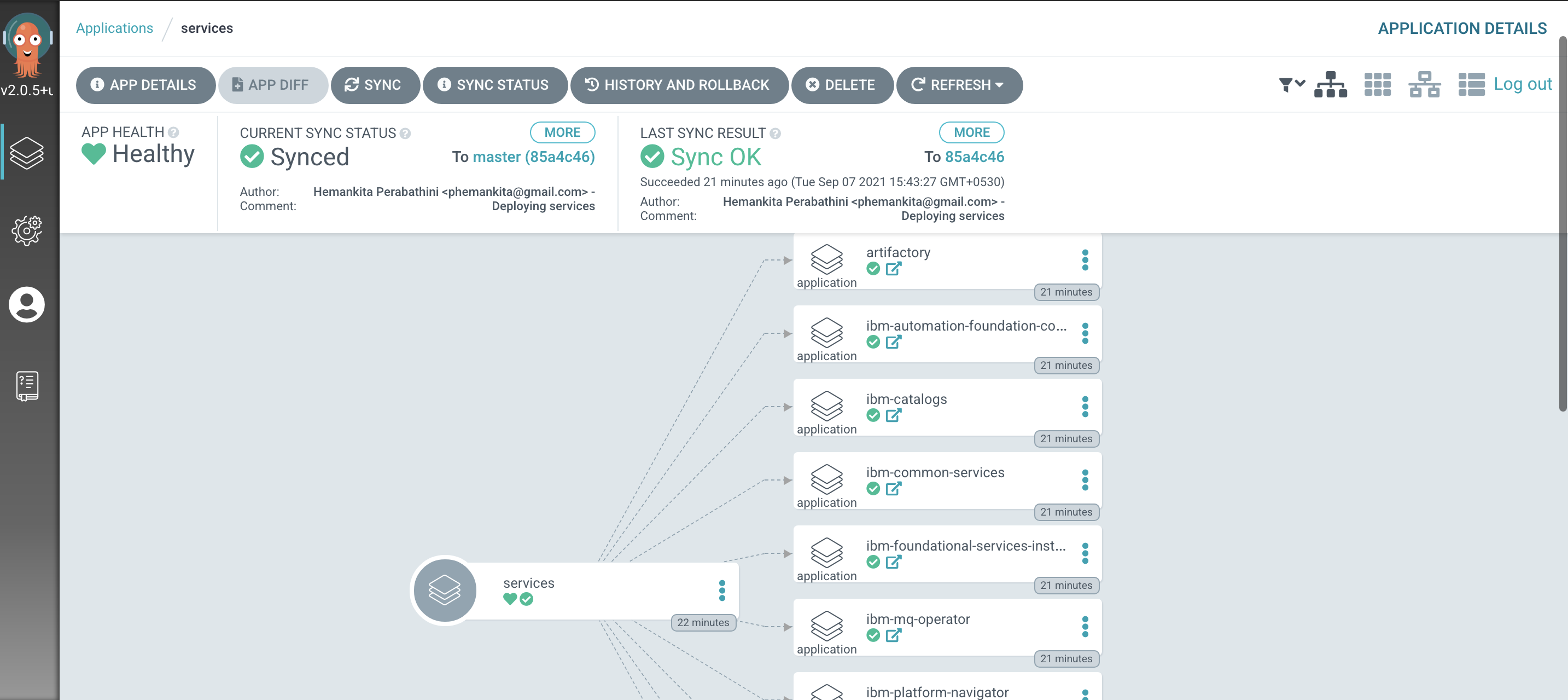

servicesapplicationLet's examine the ArgoCD application that manage the services in our reference architecture.

In the ArgoCD UI Applications view, click on the icon for the `services` application:

We can see that the `services` ArgoCD application creates 10 ArgoCD applications, each of which is responsible for applying specific YAMLs to the cluster according to the folder the ArgoCD application is watching.

It's the `services` ArgoCD application that watches the `0-bootstrap/single-cluster/2-services/argocd` folder for ArgoCD applications that apply service resources to our cluster. It was the `services` application that created the `artifactory` ArgoCD application, which manages the Artifactory instance used for application configuration management; we will explore it later in this section of the tutorial.

We'll continually reinforce these relationships as we work through the tutorial. You might like to spend some time exploring the ArgoCD UI and ArgoCD YAMLs before you proceed, though it's not necessary, as you'll get lots of practice as we proceed.
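If you prefer the command line to the UI, the same sync and health information can be read from the Application resources. The following is a minimal sketch, not part of the tutorial's tooling: it assumes the ArgoCD applications live in the `openshift-gitops` namespace and that `oc get applications.argoproj.io --no-headers` prints the name, sync status and health status in its first three columns. The helper name `not_ready_apps` and the sample rows are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads "NAME SYNC HEALTH" rows on stdin and prints the
# names of applications that are not yet both Synced and Healthy.
not_ready_apps() {
  awk '$2 != "Synced" || $3 != "Healthy" { print $1 }'
}

# Illustrative sample rows, not captured from a real cluster.
sample_rows='artifactory OutOfSync Progressing
sonarqube Synced Progressing
services Synced Healthy'

printf '%s\n' "$sample_rows" | not_ready_apps

# Against a live cluster (namespace is an assumption), the same filter could be fed with:
#   oc get applications.argoproj.io -n openshift-gitops --no-headers | not_ready_apps
```

An empty result would suggest that every listed application has reached `Synced` and `Healthy`.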

-

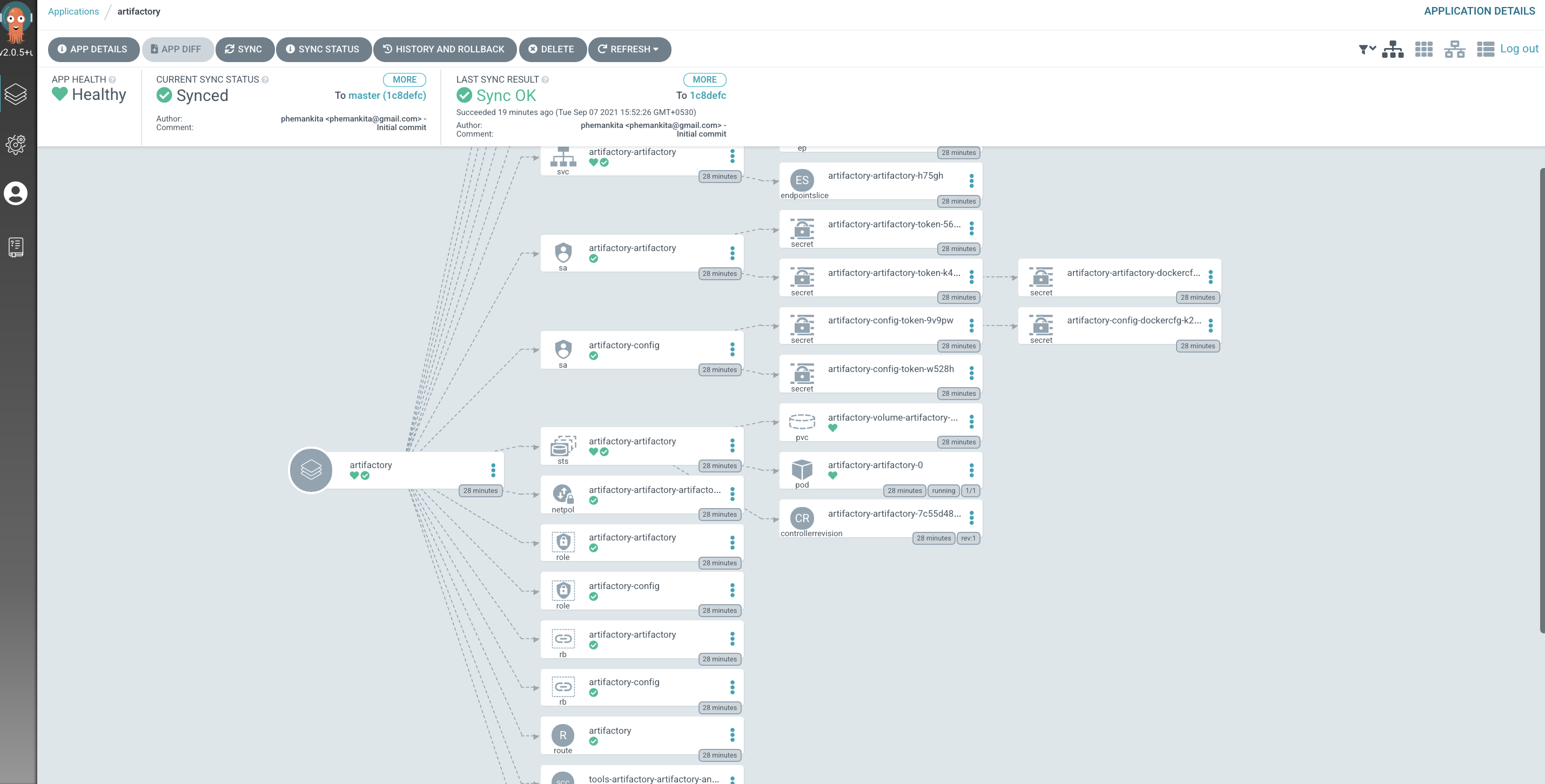

Examine

Artifactoryresources in detailYou can see the Kubernetes resources that the

ArtifactoryArgoCD application has created for Artifactory.Click on the

Artifactory:

See how many different Kubernetes resources were created when the Artifactory ArgoCD application YAML was applied to the cluster. That's why it took the

ArtifactoryArgoCD application a little time to sync.If you'd like, have a look at some of the other ArgoCD applications, such as

Sonarqubeand the Kubernetes resources they created. -

The

servicesArgoCD projectAs we've seen in the ArgoCD UI, the

servicesArgoCD application is responsible for creating the ArgoCD applications that manage the services within the cluster. Let's examine their definitions to see how they do this.Issue the following command:

    cat 0-bootstrap/single-cluster/2-services/2-services.yaml

The following YAML may initially look a little intimidating; we'll discuss the major elements below:

    ---
    apiVersion: argoproj.io/v1alpha1
    kind: AppProject
    metadata:
      name: services
      labels:
        gitops.tier.layer: services
    spec:
      sourceRepos: [] # Populated by kustomize patches in 2-services/kustomization.yaml
      destinations:
      - namespace: tools
        server: https://kubernetes.default.svc
      - namespace: ibm-common-services
        server: https://kubernetes.default.svc
      - namespace: redhat-operators
        server: https://kubernetes.default.svc
      - namespace: openshift-operators
        server: https://kubernetes.default.svc
      - namespace: openshift-marketplace
        server: https://kubernetes.default.svc
      - namespace: ci
        server: https://kubernetes.default.svc
      - namespace: dev
        server: https://kubernetes.default.svc
      - namespace: staging
        server: https://kubernetes.default.svc
      - namespace: prod
        server: https://kubernetes.default.svc
      - namespace: sealed-secrets
        server: https://kubernetes.default.svc
      - namespace: istio-system
        server: https://kubernetes.default.svc
      - namespace: openldap
        server: https://kubernetes.default.svc
      - namespace: instana-agent
        server: https://kubernetes.default.svc
      - namespace: openshift-gitops
        server: https://kubernetes.default.svc
      clusterResourceWhitelist:
      # TODO: SCC needs to be moved to 1-infra, here for now for artifactory
      - group: "security.openshift.io"
        kind: SecurityContextConstraints
      - group: "console.openshift.io"
        kind: ConsoleLink
      - group: "apps"
        kind: statefulsets
      - group: "apps"
        kind: deployments
      - group: ""
        kind: services
      - group: ""
        kind: configmaps
      - group: ""
        kind: secrets
      - group: ""
        kind: serviceaccounts
      - group: "batch"
        kind: jobs
      - group: ""
        kind: roles
      - group: "route.openshift.io"
        kind: routes
      - group: ""
        kind: RoleBinding
      - group: "rbac.authorization.k8s.io"
        kind: ClusterRoleBinding
      - group: "rbac.authorization.k8s.io"
        kind: ClusterRole
      - group: apiextensions.k8s.io
        kind: CustomResourceDefinition
      roles:
      # A role which provides read-only access to all applications in the project
      - name: read-only
        description: Read-only privileges to my-project
        policies:
        - p, proj:my-project:read-only, applications, get, my-project/*, allow
        groups:
        - argocd-admins
    ---
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: services
      annotations:
        argocd.argoproj.io/sync-wave: "200"
      labels:
        gitops.tier.layer: gitops
    spec:
      destination:
        namespace: openshift-gitops
        server: https://kubernetes.default.svc
      project: services
      source: # repoURL and targetRevision populated by kustomize patches in 2-services/kustomization.yaml
        path: 0-bootstrap/single-cluster/2-services
      syncPolicy:
        automated:
          prune: true
          selfHeal: true

Notice how this YAML defines two ArgoCD resources: a `services` project, which manages all the necessary services that are needed by the applications, and a `services` application, which watches the `0-bootstrap/single-cluster/2-services` folder.

Notice how the `destinations` for the `services` project are limited to the `ci`, `tools` and `dev` namespaces -- as well as a few others that we'll use in the tutorial. These `destinations` restrict the namespaces where ArgoCD applications in the `services` project can manage resources.

The same is true for `clusterResourceWhitelist`. It limits the Kubernetes resources that can be managed to `configmaps`, `deployments` and `rolebindings`, amongst others.

In summary, we see that the `services` project is used to group all the ArgoCD applications that will manage the services in our cluster. These ArgoCD applications can only perform specific actions on specific resource types in specific namespaces. See how ArgoCD is acting as a well-governed administrator.

- The similar structure of `services` and `infra` ArgoCD applications

Even though we didn't closely examine the `infra` ArgoCD application YAML in the previous topic, it has a very similar structure to the ArgoCD `services` application we've just examined.

Type the following command to list the ArgoCD `infra` app YAML.

    cat 0-bootstrap/single-cluster/1-infra/1-infra.yaml

Again, although this YAML might look a little intimidating, the overall structure is the same as for `services`:

    ---
    apiVersion: argoproj.io/v1alpha1
    kind: AppProject
    metadata:
      name: infra
      labels:
        gitops.tier.layer: infra
    spec:
      sourceRepos: [] # Populated by kustomize patches in 1-infra/kustomization.yaml
      destinations:
      - namespace: ci
        server: https://kubernetes.default.svc
      - namespace: dev
        server: https://kubernetes.default.svc
      - namespace: staging
        server: https://kubernetes.default.svc
      - namespace: prod
        server: https://kubernetes.default.svc
      - namespace: sealed-secrets
        server: https://kubernetes.default.svc
      - namespace: tools
        server: https://kubernetes.default.svc
      - namespace: ibm-common-services
        server: https://kubernetes.default.svc
      - namespace: istio-system
        server: https://kubernetes.default.svc
      - namespace: openldap
        server: https://kubernetes.default.svc
      - namespace: instana-agent
        server: https://kubernetes.default.svc
      - namespace: openshift-gitops
        server: https://kubernetes.default.svc
      clusterResourceWhitelist:
      - group: ""
        kind: Namespace
      - group: ""
        kind: RoleBinding
      - group: "security.openshift.io"
        kind: SecurityContextConstraints
      - group: "console.openshift.io"
        kind: ConsoleNotification
      - group: "console.openshift.io"
        kind: ConsoleLink
      roles:
      # A role which provides read-only access to all applications in the project
      - name: read-only
        description: Read-only privileges to my-project
        policies:
        - p, proj:my-project:read-only, applications, get, my-project/*, allow
        groups:
        - argocd-admins
    ---
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: infra
      annotations:
        argocd.argoproj.io/sync-wave: "100"
      labels:
        gitops.tier.layer: gitops
    spec:
      destination:
        namespace: openshift-gitops
        server: https://kubernetes.default.svc
      project: infra
      source: # repoURL and targetRevision populated by kustomize patches in 1-infra/kustomization.yaml
        path: 0-bootstrap/single-cluster/1-infra
      syncPolicy:
        automated:
          prune: true
          selfHeal: true

As with `2-services.yaml`, we can see:

- An ArgoCD project called `infra`. ArgoCD applications defined in this project will be limited by the `destinations:` and `clusterResourceWhitelist:` specified in the YAML.
- An ArgoCD app called `infra`. This is the ArgoCD application that we used in the previous section of the tutorial. It watches the `path: 0-bootstrap/single-cluster/1-infra` folder for ArgoCD applications that it applies to the cluster. It was these applications that managed the `ci`, `tools` and `dev` namespaces, for example.
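To see at a glance which namespaces a project may deploy into, the `destinations` entries can be pulled out of a project definition. The sketch below is an illustration under stated assumptions: it relies on the simple `- namespace: <name>` layout used in these files rather than on a real YAML parser, and the helper name `project_destinations` and the trimmed sample are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: prints the namespaces listed under "destinations:" in an
# AppProject definition read from stdin. Only list entries of the form
# "- namespace: <name>" are matched, so the Application's own
# "destination: namespace:" field is ignored.
project_destinations() {
  awk '$1 == "-" && $2 == "namespace:" { print $3 }'
}

# Trimmed copy of the infra project, for illustration only.
sample_project='kind: AppProject
metadata:
  name: infra
spec:
  destinations:
  - namespace: ci
    server: https://kubernetes.default.svc
  - namespace: dev
    server: https://kubernetes.default.svc
  - namespace: tools
    server: https://kubernetes.default.svc'

printf '%s\n' "$sample_project" | project_destinations

# With the GitOps repository checked out, the real files could be read instead:
#   project_destinations < 0-bootstrap/single-cluster/1-infra/1-infra.yaml
#   project_destinations < 0-bootstrap/single-cluster/2-services/2-services.yaml
```

Piping the result into `grep -qx <namespace>` would give a quick check of whether a particular namespace is among a project's destinations.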

The installation of the operators will take approximately 30 - 45 minutes.


Monitor your installation in the workspace¶

From this stage, the installation will take approximately 1.5 hours to complete. After you start the installation, you are brought to the Schematics workspace for your Cloud Pak. You can track progress by viewing the logs. Go to the Activity tab, and click View logs.

To further track the installation, you can monitor the status of IBM Cloud Pak® for Security Threat Management:

- Log in to the OpenShift web console and ensure you are in the Administrator view.
- Go to Operators > Installed Operators and ensure that the Project is set to the namespace where IBM Cloud Pak® for Security was installed.
- In the list of installed operators, click IBM Cloud Pak for Security.
- On the Threat Management tab, select the threatmgmt instance.
- On the Details page, the following message is displayed in the Conditions section when installation is complete:

        Install strategy completed with no errors

Retrieve foundational services login details¶

Use the following commands to retrieve IBM Cloud Pak foundational services hostname, default username, and password:

Hostname:

    oc -n ibm-common-services get route | grep cp-console | awk '{print $2}'

Username:

    oc -n ibm-common-services get secret platform-auth-idp-credentials -o jsonpath='{.data.admin_username}' | base64 --decode

Password:

    oc -n ibm-common-services get secret platform-auth-idp-credentials -o jsonpath='{.data.admin_password}' | base64 --decode

These login details are required to access foundational services and configure your LDAP directory.
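The `base64 --decode` step is needed because Kubernetes stores Secret values base64-encoded under `.data`. A minimal, cluster-free sketch of that round trip follows; the value `example-user` is made up for illustration and is not the actual default username.

```shell
#!/bin/sh
# Encode a made-up value the way it would appear under .data in a Secret.
encoded=$(printf '%s' 'example-user' | base64)
echo "$encoded"

# Decode it again, as the commands above do with the jsonpath output.
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "$decoded"
```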

Next steps¶

1. If the adminUser you provided is a user ID that you added and authenticated by using the IBM Cloud account that is associated with the cluster and roksAuthentication was enabled, go to step 2. Otherwise, configure LDAP authentication and ensure that the adminUser that you provided exists in the LDAP directory.

2. Log in to Cloud Pak® for Security using the domain and the adminUser that you provided during installation. The domain, also known as application URL, can be retrieved by running the following command:

        oc get route isc-route-default --no-headers -n <CP4S_NAMESPACE> | awk '{print $2}'

    Select Enterprise LDAP in the login screen if you are logging in using an LDAP you connected to Foundational Services, otherwise use OpenShift Authentication if it is enabled.

3. Add users to Cloud Pak® for Security.

4. Install the IBM® Security Orchestration & Automation license. If you choose Orchestration & Automation as part of your Cloud Pak® for Security bundle, you must install your Orchestration & Automation license to access the complete orchestration and automation capabilities that are provided by Orchestration & Automation.

Validation¶

- Check the status of the `CP4SThreatManagement` custom resource.

        oc get CP4SThreatManagement threatmgmt -n tools -o jsonpath='{.status.conditions}'
        # Expected output = Cloudpak for Security Deployment is successful

- Before users can log in to the console for Cloud Pak for Security, an identity provider must be configured. The documentation provides further instructions. For DEMO purposes, OpenLDAP can be deployed and instructions are provided below.

- Download the cpctl utility

    - Log in to the OpenShift cluster: `oc login --token=<token> --server=<openshift_url> -n <CP4S namespace>`
    - Retrieve the pod that contains the utility: `POD=$(oc get pod -n <CP4S namespace> --no-headers -lrun=cp-serviceability | cut -d' ' -f1)`
    - Copy the utility locally: `oc cp $POD:/opt/bin/<operatingsystem>/cpctl ./cpctl && chmod +x ./cpctl`

- Install OpenLDAP

    - Start a session: `./cpctl load`
    - Install OpenLDAP: `cpctl tools deploy_openldap --token $(oc whoami -t) --ldap_usernames 'adminUser,user1,user2,user3' --ldap_password cloudpak`

- Initial user log in

    - Retrieve Cloud Pak for Security Console URL: `oc get route isc-route-default --no-headers -n <CP4S_NAMESPACE> | awk '{print $2}'`
    - Log in with the user ID and password specified (i.e. `adminUser`/`cloudpak`).

Other important ArgoCD features¶

In this final section of this chapter, let's explore ArgoCD features you may have noticed as you explored different YAML files in this chapter:

SyncWave¶

- Using `SyncWave` to control deployment sequencing

When ArgoCD uses a GitOps repository to apply ArgoCD applications to the cluster, it applies all ArgoCD applications concurrently. Even though Kubernetes' use of eventual consistency means that resources which depend on each other can be deployed in any order, it often makes sense to deploy certain resources in a certain order. This can avoid spurious error messages or wasted compute resources, for example.

Let's compare two ArgoCD YAML files.

Firstly, let's examine `namespace-tools` in the infrastructure layer:

    cat 0-bootstrap/single-cluster/1-infra/argocd/namespace-tools.yaml

which shows the ArgoCD application YAML:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: namespace-tools
      labels:
        gitops.tier.layer: infra
      annotations:
        argocd.argoproj.io/sync-wave: "100"
    ...

Then examine `sonarqube` in the services layer:

    cat 0-bootstrap/single-cluster/2-services/argocd/instances/sonarqube.yaml

which shows the ArgoCD application YAML:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: sonarqube
      annotations:
        argocd.argoproj.io/sync-wave: "250"
      labels:
        gitops.tier.group: cntk
        gitops.tier.layer: services
        gitops.tier.source: git
      finalizers:
      - resources-finalizer.argocd.argoproj.io
    ...

Notice the use of `sync-wave: "100"` for `namespace-tools` and how it contrasts with `sync-wave: "250"` for `sonarqube`. The lower sync-wave `100` will be deployed before the higher sync-wave `250`, because it only makes sense to deploy services into the `tools` namespace after the `tools` namespace has been created.

You are free to choose any number for `sync-wave`. In our deployment, we have chosen `100-199` for infrastructure, `200-299` for services and `300-399` for applications; it provides alignment with high level folder numbering such as `1-infra` and so on.

In our tutorial, we incrementally add infrastructure, services and applications so that you can understand how everything fits together. This makes `sync-wave` less important than in a real-world system, where you might be making updates to each of these deployed layers simultaneously. In such cases the use of `sync-wave` provides a high degree of confidence in the effectiveness of a deployment change because all changes go through in the correct order.
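The lower-wave-first rule described above can be illustrated offline. The sketch below does not query ArgoCD; it only reads the `sync-wave` annotation from a definition and sorts applications by that number. The helper names `wave_of` and `order_by_wave` are hypothetical, and the YAML is a trimmed copy of the `sonarqube` definition shown earlier.

```shell
#!/bin/sh
# Hypothetical helper: prints the sync-wave annotation value found in an
# ArgoCD Application definition read from stdin.
wave_of() {
  awk '$1 == "argocd.argoproj.io/sync-wave:" { gsub(/"/, "", $2); print $2; exit }'
}

# Hypothetical helper: reads "<sync-wave> <application>" pairs on stdin and
# prints the application names with the lowest wave first.
order_by_wave() {
  sort -n -k1,1 | awk '{ print $2 }'
}

# Trimmed copy of the sonarqube definition shown above.
sonarqube_yaml='metadata:
  name: sonarqube
  annotations:
    argocd.argoproj.io/sync-wave: "250"'

sonarqube_wave=$(printf '%s\n' "$sonarqube_yaml" | wave_of)

# The wave for namespace-tools is copied from the YAML shown above.
printf '%s\n' "$sonarqube_wave sonarqube" "100 namespace-tools" | order_by_wave
```

With the repository checked out, `wave_of < 0-bootstrap/single-cluster/1-infra/argocd/namespace-tools.yaml` could supply the second value instead of the hard-coded `100`.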

Congratulations!

You've installed Tekton and configured the key services in the cluster to support continuous integration and continuous delivery. We installed them into the `ci` and `tools` namespaces we created previously.

This chapter is now complete.