Configuring the cluster infrastructure

Audience: Architects, Application developers, Administrators

Timing: 60 minutes

Overview

In this topic, we're going to:

- Explore the sample GitOps repository in a little more detail

- Customize the GitOps repository for our cluster

- Connect ArgoCD to the customized GitOps repository

- Bootstrap the cluster

- Explore how the `ci` namespace was created

- Try out some dynamic changes to the cluster with ArgoCD

- Explore how ArgoCD manages configuration drift

By the end of this topic we'll have a cluster up and running, having used GitOps to do it. We'll fully understand how ArgoCD manages cluster change and configuration drift.

Introduction

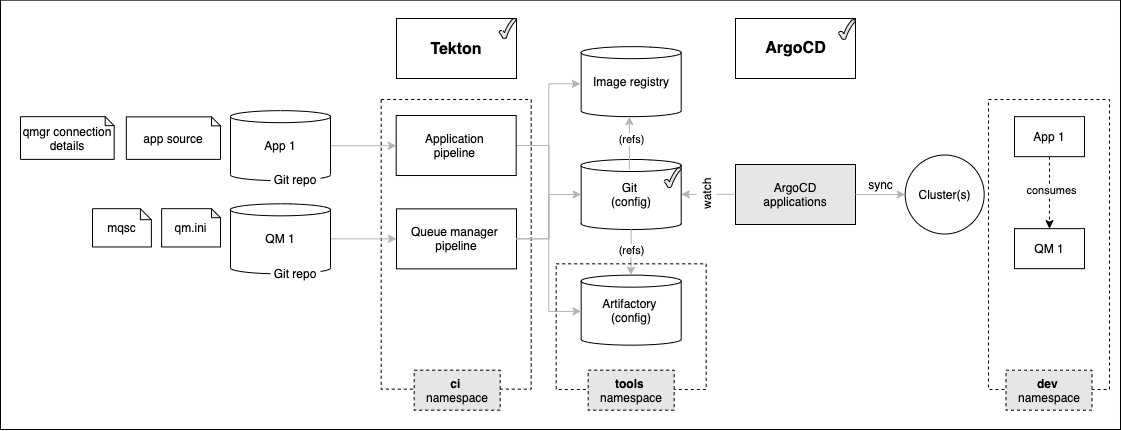

In the cluster configuration topic of this tutorial, we installed the fundamental components that enabled continuous integration and continuous deployment. These components included a sample GitOps repository and ArgoCD.

In this topic we're going to customize our GitOps repository and point ArgoCD at it. ArgoCD will then install the highlighted infrastructure components that are used by our MQ CI/CD process:

We'll examine these components in more detail throughout this section of the tutorial; here's an initial overview of their function:

- `ci-namespace` provides an execution namespace for our pipelines.

- `tools-namespace` provides an execution namespace for tools such as Artifactory.

- `dev-namespace` provides an execution namespace for our deployed MQ applications and queue managers when running in the development environment. Later in the tutorial, we'll add `staging` and `prod` namespaces.

- ArgoCD applications manage the `ci`, `tools` and `dev` namespaces.

You may have already noticed that the multi-tenancy-gitops repository contains YAMLs that refer to the ArgoCD applications and namespaces in the diagram above. We're now going to use these YAMLs to configure the cluster resources using GitOps. Becoming comfortable with the concepts by practicing GitOps will help us in later chapters when we create MQ applications and queue managers using the same approach.

Pre-requisites

Before attempting this topic, you must have completed the following tasks:

- You have completed the previous sections of this tutorial.

- You have installed all the command line tools.

See these instructions about how to install these prerequisites.
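If you'd like a quick way to confirm that the command line tools used in this topic are on your `PATH`, the following is a minimal sketch in POSIX shell. `check_tools` is a hypothetical helper, not part of the tutorial repositories, and the tools you pass to it (for example `oc`, `git` and `tree`) are simply the commands this topic uses, not the full prerequisite list.

```shell
# check_tools: hypothetical helper that reports every named command
# that cannot be found on PATH.
# Returns 0 when all tools are found, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_tools oc git tree` prints a `missing:` line for each tool it can't find and returns a non-zero status if any are absent.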

Video walkthrough

The following video walkthrough takes you through this topic step by step:

Click to watch the video

The sample GitOps repository

Let's understand how GitOps works in practice by using it to install the components we've highlighted in the above diagram.

Let's first look at the high level structure of the multi-tenancy-gitops GitOps repository.

Ensure you're logged in to the cluster

Tip

Ensure you're in the terminal window that you used to set up your cluster, i.e. in the `multi-tenancy-gitops` subfolder.

Log into your OCP cluster, substituting the `--token` and `--server` parameters with your values:

```bash
oc login --token=<token> --server=<server>
```

If you are unsure of these values, click your user ID in the OpenShift web console and select "Copy Login Command".

Locate your GitOps repository

If necessary, change to your GitOps repository, which is located under the folder stored in the `$GIT_ROOT` environment variable.

Issue the following command to change to your GitOps repository:

```bash
cd $GIT_ROOT
cd multi-tenancy-gitops
```
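The two `cd` commands above assume `$GIT_ROOT` is set in your current shell. As a minimal sketch, assuming the repository was cloned as `multi-tenancy-gitops` directly under `$GIT_ROOT`, a hypothetical helper can make that assumption explicit and fail with a clear message instead of leaving you in the wrong folder:

```shell
# cd_gitops_repo: hypothetical helper that changes into the
# multi-tenancy-gitops clone under $GIT_ROOT, failing loudly if the
# variable is unset or the folder does not look like the GitOps repository.
cd_gitops_repo() {
  if [ -z "${GIT_ROOT:-}" ]; then
    echo "GIT_ROOT is not set" >&2
    return 1
  fi
  repo="$GIT_ROOT/multi-tenancy-gitops"
  # The 0-bootstrap folder is used as a simple marker for the right repository.
  if [ ! -d "$repo/0-bootstrap" ]; then
    echo "no 0-bootstrap folder in $repo" >&2
    return 1
  fi
  cd "$repo" || return 1
}
```

Run `cd_gitops_repo` in place of the two `cd` commands. Checking for `0-bootstrap` is only a heuristic for "this looks like the GitOps repository"; adjust it if your clone differs.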

Explore the high level folder structure

Use the following command to display the folder structure:

```bash
tree . -d -L 2
```

We can see the different folders in the GitOps repository:

```
.
├── 0-bootstrap
│   ├── others
│   └── single-cluster
├── doc
│   ├── diagrams
│   ├── experimental
│   ├── images
│   └── scenarios
├── scripts
│   └── bom
└── setup
    ├── ocp47
    └── ocp4x
```

The `0-bootstrap` folder is the key folder that contains different profiles for different cluster topologies. A cluster profile such as `single-cluster` controls which resources are deployed to a single cluster. We'll be using the `single-cluster` profile, although you can see that other profiles are available.

There are other folders containing utility scripts and some documentation; we'll explore these later.

The `0-bootstrap` folder

The process of installing components into a cluster is called bootstrapping because it's the first thing that happens to a cluster after it has been created. We will bootstrap our cluster using the `0-bootstrap` folder in the `multi-tenancy-gitops` repository.

Let us examine the `0-bootstrap` folder structure:

```bash
tree ./0-bootstrap/ -d -L 2
```

We can see the different folders:

```
./0-bootstrap/
├── others
│   ├── 1-shared-cluster
│   ├── 2-isolated-cluster
│   └── 3-multi-cluster
└── single-cluster
    ├── 1-infra
    ├── 2-services
    └── 3-apps
```

Notice the different cluster profiles. We're going to use the `single-cluster` profile. See how this profile has three sub-folders corresponding to three layers of components: infrastructure, services and applications. Every component in our architecture will be in one of these layers.

The `single-cluster` profile in more detail

Use the following command to display the `single-cluster` folder in more detail:

```bash
tree ./0-bootstrap/single-cluster/ -L 2
```

We can see the different folders:

```
./0-bootstrap/single-cluster/
├── 1-infra
│   ├── 1-infra.yaml
│   ├── argocd
│   └── kustomization.yaml
├── 2-services
│   ├── 2-services.yaml
│   ├── argocd
│   └── kustomization.yaml
├── 3-apps
│   ├── 3-apps.yaml
│   ├── argocd
│   └── kustomization.yaml
├── bootstrap.yaml
└── kustomization.yaml
```

Again, see the different layers of the architecture: infrastructure, services and applications.

Notice how each of these high level folders (`1-infra`, `2-services`, `3-apps`) has an `argocd` folder. These `argocd` folders contain the ArgoCD applications that control which resources in that architectural layer are deployed to the cluster.

ArgoCD applications

Later in this tutorial, we'll see in detail how these ArgoCD applications work. For now, let's explore the range of ArgoCD applications that control the infrastructure components deployed to the cluster.

Type the following command:

```bash
tree 0-bootstrap/single-cluster/1-infra/
```

It shows a list of ArgoCD applications that are used to manage Kubernetes infrastructure resources:

```
0-bootstrap/single-cluster/1-infra/
├── 1-infra.yaml
├── argocd
│   ├── consolelink.yaml
│   ├── consolenotification.yaml
│   ├── infraconfig.yaml
│   ├── machinesets.yaml
│   ├── namespace-baas.yaml
│   ├── namespace-ci.yaml
│   ├── namespace-cloudpak.yaml
│   ├── namespace-db2.yaml
│   ├── namespace-dev.yaml
│   ├── namespace-ibm-common-services.yaml
│   ├── namespace-instana-agent.yaml
│   ├── namespace-istio-system.yaml
│   ├── namespace-mq.yaml
│   ├── namespace-openldap.yaml
│   ├── namespace-openshift-storage.yaml
│   ├── namespace-prod.yaml
│   ├── namespace-robot-shop.yaml
│   ├── namespace-sealed-secrets.yaml
│   ├── namespace-spp-velero.yaml
│   ├── namespace-spp.yaml
│   ├── namespace-staging.yaml
│   ├── namespace-tools.yaml
│   ├── scc-wkc-iis.yaml
│   ├── serviceaccounts-db2.yaml
│   ├── serviceaccounts-ibm-common-services.yaml
│   ├── serviceaccounts-mq.yaml
│   ├── serviceaccounts-tools.yaml
│   └── storage.yaml
└── kustomization.yaml
```

Notice the many `namespace-` YAMLs; we'll see in a moment how each of these defines an ArgoCD application dedicated to managing a Kubernetes namespace in our cluster. The `serviceaccounts-` YAMLs similarly manage service accounts.

There are similar ArgoCD applications for the service and application layers. Feel free to examine their corresponding folders; we will look at them in more detail later.

A word on terminology

As we get started with ArgoCD, it can be easy to confuse the term ArgoCD application with your application. That's because ArgoCD uses the term application to refer to a Kubernetes custom resource that was initially designed to manage a set of application resources. However, an ArgoCD application can automate the deployment of any Kubernetes resource within a cluster, such as a namespace, as we'll see a little later.

Why customize

We're now going to customize two of the sample GitOps repositories to enable them to be used by ArgoCD to deploy Kubernetes resources to your particular cluster. The process of customization is important to understand; we will use it throughout the tutorial.

Recall the different roles of the four repositories:

- `multi-tenancy-gitops` is the main GitOps repository. It contains the ArgoCD YAMLs for the fixed components in the cluster, such as a namespace or a Tekton or SonarQube instance.

- `multi-tenancy-gitops-infra` is a library repository. It contains infrastructure YAMLs referred to by the ArgoCD YAMLs that manage infrastructure in the cluster, such as a namespace.

- `multi-tenancy-gitops-services` is a library repository. It contains the service YAMLs referred to by the ArgoCD YAMLs that manage services in the cluster, such as Tekton or SonarQube.

- `multi-tenancy-gitops-apps` is a GitOps repository containing the user application components that are deployed to the cluster. These components include applications, databases and queue managers, for example.

It is the multi-tenancy-gitops and multi-tenancy-gitops-apps repositories that need to be customized for your cluster. The other two repositories don't need to be customized because they perform the function of a library.

Tailor multi-tenancy-gitops

Let's now customize the multi-tenancy-gitops repository for your organization. The repository provides a script that uses the $GIT_ORG environment variable to replace the generic values in the cloned repository with those of your GitHub organization.

Once customized, we'll push our updated repository back to GitHub where it can be accessed by ArgoCD.

Set your git branch

In this tutorial, we use the `master` branch of the `multi-tenancy-gitops` repository. We will store this in the `$GIT_BRANCH` environment variable for use by various scripts and commands.

Issue the following command to set its value:

```bash
export GIT_BRANCH=master
```

You can verify your `$GIT_BRANCH` as follows:

```bash
echo $GIT_BRANCH
```
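Several later steps read environment variables such as `$GIT_BRANCH`, and the customization script uses `$GIT_ORG`. As a minimal sketch of a pre-flight check, assuming a POSIX shell (which has no `${!name}` indirection, hence the `eval`), here is a hypothetical `require_vars` helper:

```shell
# require_vars: hypothetical helper that prints NAME=value for each named
# variable and fails if any of them is unset or empty.
require_vars() {
  status=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name is not set" >&2
      status=1
    else
      echo "$name=$value"
    fi
  done
  return "$status"
}
```

For example, `require_vars GIT_ROOT GIT_ORG GIT_BRANCH` prints each value and returns non-zero if any of them is empty, which is a cheap way to catch a new terminal session that has lost its exports.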

Run the customization script

We customize the cloned `multi-tenancy-gitops` repository to the relevant values for our cluster using the `set-git-source.sh` script. This script will replace various YAML elements in this repository to refer to your git organization via a GitHub URL.

Now run the script:

```bash
./scripts/set-git-source.sh
```

The script reports the repositories and branch it uses for its customizations:

```
Setting kustomization patches to https://github.com/prod-ref-guide/multi-tenancy-gitops.git on branch master
Setting kustomization patches to https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git on branch master
Setting kustomization patches to https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git on branch master
Setting kustomization patches to https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git on branch master
done replacing variables in kustomization.yaml files
git commit and push changes now
```
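One way to double-check that the script replaced every generic value is to search for leftover placeholders. This sketch assumes the placeholders follow the `${GIT_...}` form that appears in the `git diff` output in the next step; `find_placeholders` is a hypothetical helper, and `0-bootstrap` is just a sensible default folder to search:

```shell
# find_placeholders: hypothetical check that lists YAML files under a folder
# that still contain an unreplaced ${GIT_...} placeholder.
# Returns 0 only when no such file is found.
find_placeholders() {
  dir=${1:-0-bootstrap}
  if [ ! -d "$dir" ]; then
    echo "no such folder: $dir" >&2
    return 2
  fi
  # -F: search for the literal text ${GIT_ rather than a regular expression.
  files=$(grep -rlF --include='*.yaml' '${GIT_' "$dir")
  if [ -n "$files" ]; then
    echo "$files"
    return 1
  fi
  echo "no placeholders left in $dir"
}
```

Running `find_placeholders` from the repository root should print `no placeholders left in 0-bootstrap`. If it lists a file, inspect it: that file may still refer to a generic value rather than your organization.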

Explore the customization changes

You can easily identify all the files that have been customized using the `git status` command.

Issue the following command:

```bash
git status
```

to view the complete set of changed files in the `multi-tenancy-gitops` repository:

```
On branch master
Your branch is up to date with 'origin/master'.

Changes not staged for commit:
  (use "git add <file>..." to update what will be committed)
  (use "git restore <file>..." to discard changes in working directory)
        modified:   0-bootstrap/bootstrap.yaml
        modified:   0-bootstrap/others/1-shared-cluster/bootstrap-cluster-1-cicd-dev-stage-prod.yaml
        modified:   0-bootstrap/others/1-shared-cluster/bootstrap-cluster-n-prod.yaml
...
        modified:   0-bootstrap/single-cluster/kustomization.yaml
```

(We've abbreviated the list.)

You can see the kind of changes that the script has made with the `git diff` command:

```bash
git diff 0-bootstrap/bootstrap.yaml
```

which calculates the differences in the file as:

```diff
diff --git a/0-bootstrap/bootstrap.yaml b/0-bootstrap/bootstrap.yaml
index 0754133..7303c53 100644
--- a/0-bootstrap/bootstrap.yaml
+++ b/0-bootstrap/bootstrap.yaml
@@ -10,8 +10,8 @@ spec:
   project: default
   source:
     path: 0-bootstrap/single-cluster
-    repoURL: ${GIT_BASEURL}/${GIT_ORG}/${GIT_GITOPS}
-    targetRevision: ${GIT_GITOPS_BRANCH}
+    repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops.git
+    targetRevision: master
   syncPolicy:
     automated:
       prune: true
```

See how the `repoURL` and `targetRevision` YAML elements now refer to your organization in GitHub rather than a generic name.

By making YAMLs like this active in your cluster, ArgoCD is able to refer to your repository to manage its contents.
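To make the substitution concrete, here is an illustrative sketch that replaces the four placeholders seen in the diff with real values using `sed`. It is not the implementation of `set-git-source.sh`, which we haven't examined; `render_placeholders` is a hypothetical name, and the example values below (`https://github.com`, `tutorial-org-123`, `multi-tenancy-gitops.git`, `master`) are assumptions chosen to reproduce the `+` lines of the diff.

```shell
# render_placeholders: illustrative sketch (not the real set-git-source.sh)
# that replaces the generic ${GIT_...} placeholders in one file with the
# values of the matching shell variables, writing the result to stdout.
render_placeholders() {
  file=$1
  if [ -z "${GIT_BASEURL:-}" ] || [ -z "${GIT_ORG:-}" ] ||
     [ -z "${GIT_GITOPS:-}" ] || [ -z "${GIT_GITOPS_BRANCH:-}" ]; then
    echo "set GIT_BASEURL, GIT_ORG, GIT_GITOPS and GIT_GITOPS_BRANCH first" >&2
    return 1
  fi
  # "\$" stops the shell expanding the placeholder, so sed sees the literal
  # text ${GIT_...}; "|" is the delimiter because the values contain "/".
  sed \
    -e "s|\${GIT_BASEURL}|$GIT_BASEURL|g" \
    -e "s|\${GIT_ORG}|$GIT_ORG|g" \
    -e "s|\${GIT_GITOPS_BRANCH}|$GIT_GITOPS_BRANCH|g" \
    -e "s|\${GIT_GITOPS}|$GIT_GITOPS|g" \
    "$file"
}
```

For example, with `GIT_BASEURL=https://github.com`, `GIT_ORG=tutorial-org-123`, `GIT_GITOPS=multi-tenancy-gitops.git` and `GIT_GITOPS_BRANCH=master` set, `render_placeholders some-file.yaml` prints the file with its `repoURL` and `targetRevision` lines filled in, without modifying it.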

Add the changes to a git index, ready to push to GitHub

Now that we've customized the local clone of the `multi-tenancy-gitops` repository, we should commit the changes and push them back to GitHub, where they can be accessed by ArgoCD.

First, we add the changes to the git index:

```bash
git add .
```

Commit the changes to git

We then commit the staged changes:

```bash
git commit -s -m "GitOps customizations for organization and cluster"
```

which will show the commit message:

```
[master a900c39] GitOps customizations for organization and cluster
 46 files changed, 176 insertions(+), 176 deletions(-)
```

Push changes to GitHub

Finally, we push this commit back to the `master` branch on GitHub:

```bash
git push origin $GIT_BRANCH
```

which shows that the changes have now been pushed to your GitOps repository:

```
Enumerating objects: 90, done.
Counting objects: 100% (90/90), done.
Delta compression using up to 8 threads
Compressing objects: 100% (47/47), done.
Writing objects: 100% (48/48), 4.70 KiB | 1.57 MiB/s, done.
Total 48 (delta 32), reused 0 (delta 0)
remote: Resolving deltas: 100% (32/32), completed with 23 local objects.
To https://github.com/tutorial-org-123/multi-tenancy-gitops.git
   d95eca5..a900c39  master -> master
```

We've now customized the `multi-tenancy-gitops` repository for your organization and successfully pushed it to GitHub.
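The add, commit and push sequence above is repeated several times in this tutorial. A minimal sketch of wrapping it in one function, assuming you run it from the repository root with `$GIT_BRANCH` set; `commit_and_push` is a hypothetical helper that adds two guards the manual steps don't have:

```shell
# commit_and_push: hypothetical wrapper around git add / commit -s / push.
# It refuses to run if $GIT_BRANCH is unset or is not the branch currently
# checked out, and skips the commit when nothing is staged.
commit_and_push() {
  msg=$1
  if [ -z "${GIT_BRANCH:-}" ]; then
    echo "GIT_BRANCH is not set" >&2
    return 1
  fi
  # symbolic-ref also works on a brand-new repository with no commits yet.
  current=$(git symbolic-ref --short HEAD 2>/dev/null)
  if [ "$current" != "$GIT_BRANCH" ]; then
    echo "on branch '$current', expected '$GIT_BRANCH'" >&2
    return 1
  fi
  git add . || return 1
  # git diff --cached --quiet exits 0 when nothing is staged.
  if ! git diff --cached --quiet; then
    git commit -s -m "$msg" || return 1
  fi
  git push origin "$GIT_BRANCH"
}
```

For example, `commit_and_push "GitOps customizations for organization and cluster"`. The branch check catches the easy mistake of pushing from a different local branch than the `master` branch that the ArgoCD applications reference.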

Connect ArgoCD

Let's now connect your customized GitOps repository to the instance of ArgoCD running in the cluster. Once connected, ArgoCD will use the contents of this repository to create matching resources in the cluster. It will also keep the cluster synchronized with any changes to the GitOps repository.

Review the `bootstrap-single-cluster` ArgoCD application

Recall that you pushed the customized local copy of the GitOps repository to your GitHub account. The repository contains a `bootstrap-single-cluster` ArgoCD application that, when deployed to the cluster, will continuously watch this repository and use its contents to synchronize the cluster.

The `0-bootstrap/single-cluster/bootstrap.yaml` file is used to create our first ArgoCD application, called `bootstrap-single-cluster`. This initial ArgoCD application effectively bootstraps the cluster by creating all the other ArgoCD applications that control the infrastructure, service and application resources deployed to the cluster.

Examine the YAML that defines the ArgoCD bootstrap application:

```bash
cat 0-bootstrap/single-cluster/bootstrap.yaml
```

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: bootstrap-single-cluster
  namespace: openshift-gitops
spec:
  destination:
    namespace: openshift-gitops
    server: https://kubernetes.default.svc
  project: default
  source:
    path: 0-bootstrap/single-cluster
    repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops.git
    targetRevision: master
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

Notice again how this ArgoCD application has been customized to use your GitOps repository: `repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops.git`.

Within this repository, `path: 0-bootstrap/single-cluster` refers to a folder that contains all the other ArgoCD applications that are used to manage cluster resources.

By deploying this ArgoCD application to a cluster, we are effectively linking our GitOps repository to that cluster.
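Before applying `bootstrap.yaml`, it can be worth confirming that its `repoURL` really points at your organization rather than a generic placeholder. A minimal text-based sketch, assuming `repoURL:` and `targetRevision:` each sit on their own line as in the YAML above; `check_bootstrap_repo` is hypothetical and is not a YAML parser:

```shell
# check_bootstrap_repo: hypothetical plain-text check that the first repoURL
# in FILE contains /ORG/, printing the repoURL and targetRevision it found.
check_bootstrap_repo() {
  file=$1
  org=$2
  url=$(sed -n 's/^[[:space:]]*repoURL:[[:space:]]*//p' "$file" | head -n 1)
  branch=$(sed -n 's/^[[:space:]]*targetRevision:[[:space:]]*//p' "$file" | head -n 1)
  case "$url" in
    *"/$org/"*)
      echo "repoURL=$url targetRevision=$branch" ;;
    *)
      echo "repoURL '$url' does not point at organization '$org'" >&2
      return 1 ;;
  esac
}
```

For example, `check_bootstrap_repo 0-bootstrap/single-cluster/bootstrap.yaml "$GIT_ORG"` prints the source it found, or returns non-zero if the URL doesn't contain `/$GIT_ORG/`, which is also what an un-customized `${GIT_BASEURL}/${GIT_ORG}/...` value would produce.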

Apply ArgoCD `bootstrap.yaml` to the cluster

Let's now deploy the `bootstrap-single-cluster` ArgoCD application to bootstrap the cluster.

Issue the following command to apply the bootstrap YAML to the cluster:

```bash
oc apply -f 0-bootstrap/single-cluster/bootstrap.yaml
```

Kubernetes will confirm that the `bootstrap-single-cluster` resource has been created:

```
application.argoproj.io/bootstrap-single-cluster created
```

Notice that:

- `application.argoproj.io` indicates that this is an ArgoCD application.

- The `bootstrap-single-cluster` ArgoCD application is now watching the `0-bootstrap/single-cluster` folder in our `multi-tenancy-gitops` repository on GitHub.

- This is the only direct cluster operation we need to perform; from now on, all cluster operations will be performed via Git operations to this repository.

Verify the bootstrap deployment

We can use the command line to verify that the bootstrap ArgoCD application is running.

Issue the following command:

```bash
oc get app/bootstrap-single-cluster -n openshift-gitops
```

You should see that the bootstrap application is synchronized and healthy:

```
NAME                       SYNC STATUS   HEALTH STATUS
bootstrap-single-cluster   Synced        Healthy
```

`SYNC STATUS` may temporarily show `OutOfSync`, or `HEALTH STATUS` may temporarily show `Missing`; simply re-issue the command to confirm it moves to `Synced` and `Healthy`.
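Rather than re-issuing the command by hand, you can poll until the application settles. This sketch, assuming a POSIX shell, reads the Argo CD Application's `.status.sync.status` and `.status.health.status` fields with `oc get -o jsonpath`; `wait_for_app` is a hypothetical helper, and the default of 30 attempts 10 seconds apart is an arbitrary choice. The `OC` variable defaults to `oc` and exists only so the function can be exercised with a stub.

```shell
# wait_for_app: hypothetical helper that polls an ArgoCD Application in
# openshift-gitops until it reports "Synced Healthy".
# Usage: wait_for_app APP [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_app() {
  app=$1
  attempts=${2:-30}
  interval=${3:-10}
  i=1
  while :; do
    status=$(${OC:-oc} get "app/$app" -n openshift-gitops \
      -o jsonpath='{.status.sync.status} {.status.health.status}' 2>/dev/null)
    echo "[$i/$attempts] $app: ${status:-unknown}"
    [ "$status" = "Synced Healthy" ] && return 0
    [ "$i" -ge "$attempts" ] && return 1
    i=$((i + 1))
    sleep "$interval"
  done
}
```

For example, `wait_for_app bootstrap-single-cluster` returns 0 once the application reports `Synced Healthy`, or 1 if it still hasn't after the last attempt.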

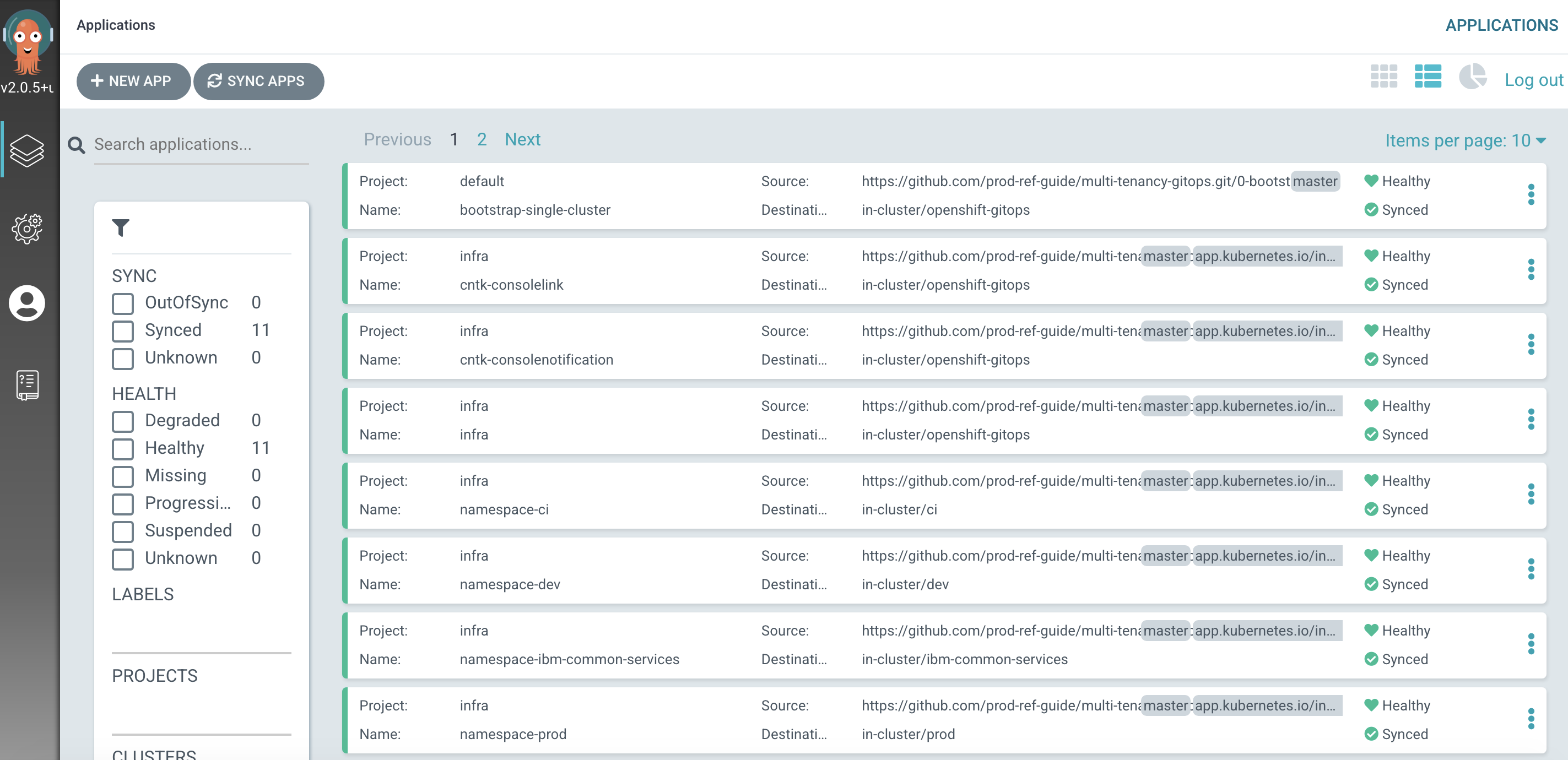

Using the UI to view the newly deployed ArgoCD applications

We can also use the ArgoCD web console to view the Kubernetes resources deployed to the cluster. Switch back to the web console, refresh the page and you should see the `bootstrap-single-cluster` ArgoCD application together with three other ArgoCD applications:

(You may need to select the `List` view rather than the `Tiles` view.)

We can see that four ArgoCD applications have been deployed to the cluster as a result of applying `bootstrap.yaml`.

The first ArgoCD application is the `bootstrap-single-cluster` ArgoCD application. It has created three other ArgoCD applications:

- `infra` watches for infrastructure components to be synchronized with the cluster.

- `services` watches for service components to be synchronized with the cluster.

- `applications` watches for application components to be synchronized with the cluster.

As resources are added to, updated in or removed from the infrastructure, service and application folders watched by these applications, the cluster will be kept synchronized with the contents of the corresponding folder.

We'll see how these applications work in more detail a little later.

The `bootstrap-single-cluster` ArgoCD application in more detail

In the ArgoCD UI Applications view, click on the `bootstrap-single-cluster` application:

You can see the bootstrap application creates two types of Kubernetes resources, namely ArgoCD `applications` and ArgoCD `projects` for `infra`, `services` and `applications`.

An ArgoCD project is a mechanism by which we can group related resources; we keep all our ArgoCD applications that manage infrastructure in the `infra` project, all applications that manage services in the `services` project, and so on.

Customizing the bootstrap

Now that the cluster is using your multi-tenancy-gitops repository to synchronize the cluster, we can update this repository with some infrastructure components that we'd like to have deployed to the cluster.

This tutorial uses Kustomize to tailor which resources are deployed to your cluster. We're going to learn a lot about Kustomize by using different `kustomization.yaml` files to tailor bootstrap, infrastructure, service and application components throughout the tutorial. If you're interested, you can also learn more about Kustomize via these examples.

Understanding `bootstrap-single-cluster` customization

The bootstrap application we deployed was customized using its `0-bootstrap/single-cluster/kustomization.yaml` file.

Issue the following command:

```bash
cat 0-bootstrap/single-cluster/kustomization.yaml
```

to show which customizations have been applied:

```yaml
resources:
- 1-infra/1-infra.yaml
- 2-services/2-services.yaml
- 3-apps/3-apps.yaml
patches:
- target:
    group: argoproj.io
    kind: Application
    labelSelector: "gitops.tier.layer=gitops"
  patch: |-
    - op: add
      path: /spec/source/repoURL
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
    - op: add
      path: /spec/source/targetRevision
      value: master
- target:
    group: argoproj.io
    kind: AppProject
    labelSelector: "gitops.tier.layer=infra"
  patch: |-
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git
- target:
    group: argoproj.io
    kind: AppProject
    labelSelector: "gitops.tier.layer=services"
  patch: |-
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops-services.git
- target:
    group: argoproj.io
    kind: AppProject
    labelSelector: "gitops.tier.layer=applications"
  patch: |-
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops.git
    - op: add
      path: /spec/sourceRepos/-
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops-apps.git
```

Notice that:

- the `resources:` element identifies the three resources to be customized:
  - `1-infra/1-infra.yaml` corresponds to the `infra` ArgoCD application that manages infrastructure components.
  - `2-services/2-services.yaml` corresponds to the `services` ArgoCD application that manages services components.
  - `3-apps/3-apps.yaml` corresponds to the `applications` ArgoCD application that manages application components.

- the `patches:` element lists the full set of customization patches:
  - each entry identifies a `target:` resource and its corresponding `patch:`
  - each `target:` identifies a resource using a `group:`, `kind:` and `labelSelector:`
  - each `patch:` specifies the change, such as `op: add` to add `path` and `value` elements to the identified target

Feel free to examine these resources in your local clone, GitHub and/or the ArgoCD web UI.

Customizing the bootstrap application

Let's modify this `0-bootstrap/single-cluster/kustomization.yaml` to deactivate the ArgoCD applications that manage the services and application components deployed to the cluster, so that ArgoCD is only watching for infrastructure updates.

Open `0-bootstrap/single-cluster/kustomization.yaml` and comment out the `2-services/2-services.yaml` and `3-apps/3-apps.yaml` resources as follows:

```yaml
resources:
- 1-infra/1-infra.yaml
#- 2-services/2-services.yaml
#- 3-apps/3-apps.yaml
patches:
- target:
    group: argoproj.io
    kind: Application
    labelSelector: "gitops.tier.layer=gitops"
```

Notice how the `services` and `applications` ArgoCD applications are no longer resources that are in scope. Now commit and push the changes to your git repository:

```bash
git add .
git commit -s -m "Using only infrastructure resources"
git push origin $GIT_BRANCH
```

which shows that the changes have been made active on GitHub:

```
Enumerating objects: 9, done.
Counting objects: 100% (9/9), done.
Delta compression using up to 8 threads
Compressing objects: 100% (5/5), done.
Writing objects: 100% (5/5), 456 bytes | 456.00 KiB/s, done.
Total 5 (delta 4), reused 0 (delta 0)
remote: Resolving deltas: 100% (4/4), completed with 4 local objects.
To https://github.com/tutorial-org-123/multi-tenancy-gitops.git
   e3f696d..ea3b43f  master -> master
```

These changes will now be seen by the ArgoCD `bootstrap-single-cluster` application.
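If you prefer to make this edit from the command line, the following sketch comments out a single `- RESOURCE` entry. It assumes entries start at the beginning of the line exactly as shown above (`- 2-services/2-services.yaml`). `disable_resource` is a hypothetical helper; it uses `awk` with an exact string match plus a temporary file, so it behaves the same on Linux and macOS, where `sed -i` differs.

```shell
# disable_resource: hypothetical helper that turns "- RESOURCE" into
# "#- RESOURCE" in a kustomization.yaml, using an exact line match.
disable_resource() {
  file=$1
  resource=$2
  if ! grep -qxF -e "- $resource" "$file"; then
    echo "not found as an active entry: $resource" >&2
    return 1
  fi
  scratch="$file.tmp.$$"
  awk -v r="$resource" '$0 == "- " r { print "#- " r; next } { print }' \
    "$file" > "$scratch" && mv "$scratch" "$file"
}
```

For example, run `disable_resource 0-bootstrap/single-cluster/kustomization.yaml 2-services/2-services.yaml` and then the same for `3-apps/3-apps.yaml`, before committing.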

The updated cluster

Let's confirm the effect of this change in the cluster. The `bootstrap-single-cluster` application is watching the GitOps repository, and when it notices the change, it will re-apply it to the cluster.

Switch back to the ArgoCD web console, refresh the page and you should see the following:

(You may need to wait a few minutes for ArgoCD to detect the change. If you've authenticated as an `admin`, you can manually `Refresh` and `Sync` the `bootstrap-single-cluster` application to force the detection.)

Notice how the `services` and `applications` ArgoCD applications have been removed from the cluster.
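The UI's `Refresh` action can also be requested from the command line. Argo CD re-reads Git for an Application when its `argocd.argoproj.io/refresh` annotation is set; the sketch below sets it to `normal`, assuming your `oc` user is allowed to annotate Applications in `openshift-gitops`. `refresh_app` is a hypothetical helper, and `OC` can be overridden with a stub for testing.

```shell
# refresh_app: hypothetical helper that asks ArgoCD to re-read Git for an
# application by setting the argocd.argoproj.io/refresh annotation.
refresh_app() {
  app=$1
  ${OC:-oc} annotate "app/$app" -n openshift-gitops \
    argocd.argoproj.io/refresh=normal --overwrite
}
```

For example, `refresh_app bootstrap-single-cluster`. Because these applications use an automated sync policy, a refresh that finds a change should normally be followed by a sync without further action; the UI remains the simplest way to force both explicitly.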

Add infrastructure

Let's now add some infrastructure components, such as namespaces and service accounts to the cluster. Again, we'll do this using a kustomization.yaml file, but this time for the infra application rather than the bootstrap-single-cluster application.

Understanding `infra` customization

Let's examine the `0-bootstrap/single-cluster/1-infra/kustomization.yaml` file that controls how your cluster infrastructure components are tailored for deployment.

Issue the following command:

```bash
cat 0-bootstrap/single-cluster/1-infra/kustomization.yaml
```

to show the `kustomization.yaml` file that defines the infrastructure components deployed to the cluster:

```yaml
resources:
#- argocd/consolelink.yaml
#- argocd/consolenotification.yaml
#- argocd/namespace-ibm-common-services.yaml
#- argocd/namespace-ci.yaml
#- argocd/namespace-dev.yaml
#- argocd/namespace-staging.yaml
#- argocd/namespace-prod.yaml
#- argocd/namespace-cloudpak.yaml
#- argocd/namespace-istio-system.yaml
#- argocd/namespace-openldap.yaml
#- argocd/namespace-sealed-secrets.yaml
#- argocd/namespace-tools.yaml
#- argocd/namespace-instana-agent.yaml
#- argocd/namespace-robot-shop.yaml
#- argocd/namespace-openshift-storage.yaml
#- argocd/namespace-spp.yaml
#- argocd/namespace-spp-velero.yaml
#- argocd/namespace-baas.yaml
#- argocd/serviceaccounts-tools.yaml
#- argocd/storage.yaml
#- argocd/infraconfig.yaml
#- argocd/machinesets.yaml
patches:
- target:
    group: argoproj.io
    kind: Application
    labelSelector: "gitops.tier.layer=infra"
  patch: |-
    - op: add
      path: /spec/source/repoURL
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git
    - op: add
      path: /spec/source/targetRevision
      value: master
```

Notice how all the ArgoCD YAMLs that control resources (e.g. namespaces) are commented out; these resources will not be applied to the cluster because they are not active.
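To see at a glance which resources a `kustomization.yaml` currently activates, here is a small `awk` sketch that prints the uncommented entries of its `resources:` list. It assumes the layout shown above (top-level keys at column 0, entries starting with `- `); `list_active_resources` is a hypothetical helper.

```shell
# list_active_resources: hypothetical helper that prints the uncommented
# entries of the top-level "resources:" list in a kustomization.yaml.
list_active_resources() {
  awk '
    /^resources:/ { in_res = 1; next }
    # Any other top-level key (e.g. "patches:") ends the resources list.
    /^[^ \t#-]/ { in_res = 0 }
    in_res && /^[ \t]*- / { sub(/^[ \t]*- /, ""); print }
  ' "$1"
}
```

Run against the file above, `list_active_resources 0-bootstrap/single-cluster/1-infra/kustomization.yaml` prints nothing, because every entry is commented out.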

Deploying infrastructure resources with `1-infra/kustomization.yaml`

We can deploy infrastructure resources to the cluster by uncommenting them and pushing that change to our GitOps repository on GitHub, where it will be seen by the `infra` ArgoCD application.

Open `0-bootstrap/single-cluster/1-infra/kustomization.yaml` and uncomment the following resources:

```
argocd/consolelink.yaml
argocd/consolenotification.yaml
argocd/namespace-ibm-common-services.yaml
argocd/namespace-ci.yaml
argocd/namespace-dev.yaml
argocd/namespace-staging.yaml
argocd/namespace-prod.yaml
argocd/namespace-sealed-secrets.yaml
argocd/namespace-tools.yaml
```

Your `kustomization.yaml` file will now look like this:

```yaml
resources:
- argocd/consolelink.yaml
- argocd/consolenotification.yaml
- argocd/namespace-ibm-common-services.yaml
- argocd/namespace-ci.yaml
- argocd/namespace-dev.yaml
- argocd/namespace-staging.yaml
- argocd/namespace-prod.yaml
#- argocd/namespace-cloudpak.yaml
#- argocd/namespace-istio-system.yaml
#- argocd/namespace-openldap.yaml
- argocd/namespace-sealed-secrets.yaml
- argocd/namespace-tools.yaml
#- argocd/namespace-instana-agent.yaml
#- argocd/namespace-robot-shop.yaml
#- argocd/namespace-openshift-storage.yaml
#- argocd/namespace-spp.yaml
#- argocd/namespace-spp-velero.yaml
#- argocd/namespace-baas.yaml
#- argocd/serviceaccounts-tools.yaml
#- argocd/storage.yaml
#- argocd/infraconfig.yaml
#- argocd/machinesets.yaml
patches:
- target:
    group: argoproj.io
    kind: Application
    labelSelector: "gitops.tier.layer=infra"
  patch: |-
    - op: add
      path: /spec/source/repoURL
      value: https://github.com/prod-ref-guide/multi-tenancy-gitops-infra.git
    - op: add
      path: /spec/source/targetRevision
      value: master
```

Commit and push the changes to your git repository:

```bash
git add .
git commit -s -m "Add infrastructure resources"
git push origin $GIT_BRANCH
```

The changes have now been pushed to your GitOps repository:

```
Enumerating objects: 11, done.
Counting objects: 100% (11/11), done.
Delta compression using up to 8 threads
Compressing objects: 100% (6/6), done.
Writing objects: 100% (6/6), 576 bytes | 576.00 KiB/s, done.
Total 6 (delta 5), reused 0 (delta 0)
remote: Resolving deltas: 100% (5/5), completed with 5 local objects.
To https://github.com/tutorial-org-123/multi-tenancy-gitops.git
   a900c39..e3f696d  master -> master
```
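The nine uncomment edits can also be scripted. This sketch mirrors the manual edit: for each resource name, it turns an exact `#- RESOURCE` line into `- RESOURCE`, and warns and skips the name if no such commented line exists. `enable_resources` is a hypothetical helper, written with `awk` and a temporary file for portability between GNU and BSD tools.

```shell
# enable_resources: hypothetical helper that uncomments "#- RESOURCE"
# entries in a kustomization.yaml for each RESOURCE given.
# Usage: enable_resources FILE RESOURCE...
enable_resources() {
  file=$1
  shift
  for resource in "$@"; do
    if ! grep -qxF -e "#- $resource" "$file"; then
      echo "not found as a commented entry: $resource" >&2
      continue
    fi
    scratch="$file.tmp.$$"
    awk -v r="$resource" '$0 == "#- " r { print "- " r; next } { print }' \
      "$file" > "$scratch" && mv "$scratch" "$file" || return 1
  done
}
```

For example, `enable_resources 0-bootstrap/single-cluster/1-infra/kustomization.yaml argocd/namespace-ci.yaml argocd/namespace-dev.yaml` uncomments just those two entries; pass all nine names to reproduce the edit above.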

The new infrastructure resources

Again, let's confirm the effect of this change in the cluster. The `infra` application is watching the GitOps repository, and when it notices the change, it will re-apply it to the cluster.

Switch back to the ArgoCD web console, refresh the page and you should see the following:

(You may need to wait a few minutes for ArgoCD to detect the change. If you've authenticated as an `admin`, you can manually `Refresh` and `Sync` the `infra` application to force the detection.)

Notice how many new ArgoCD applications have been added to the cluster. Each of these is managing a specific Kubernetes resource; for example, the `namespace-ci` ArgoCD application is managing the `ci` namespace in the cluster.

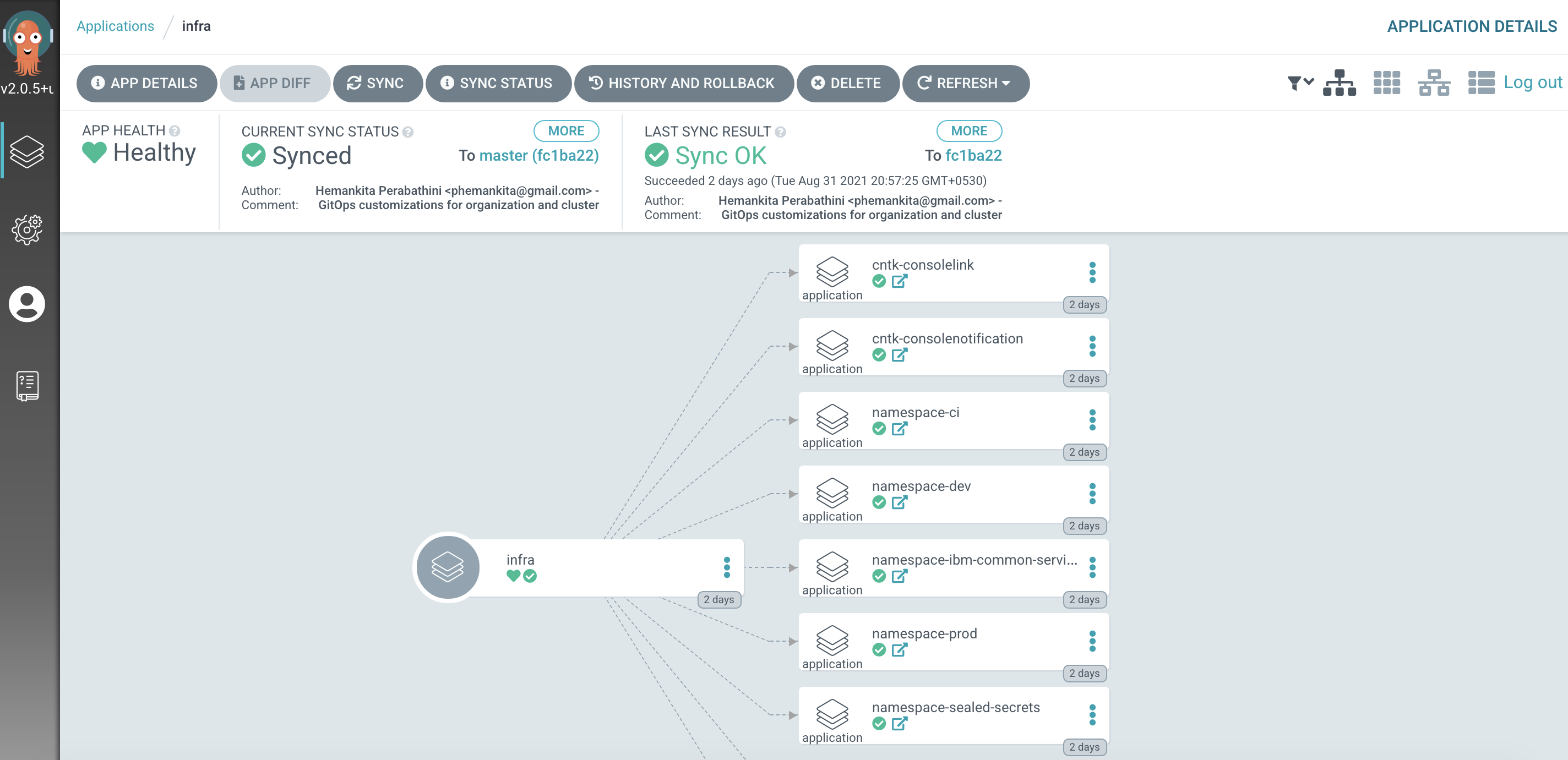

The `infra` ArgoCD application in more detail

Let's examine the `infra` ArgoCD application in more detail to see how it works.

In the ArgoCD UI Applications view, click on the `open application` icon for the `infra` application:

The `infra` ArgoCD application continuously watches the `0-bootstrap/single-cluster/1-infra/argocd` folder for ArgoCD applications that apply infrastructure resources to our cluster.

We can see that it has created 9 ArgoCD applications, corresponding to the 9 resources we uncommented in the `kustomization.yaml` file. Each of these ArgoCD applications is responsible for managing a specific infrastructure resource defined by a specific YAML. For example, the `namespace-ci` ArgoCD application manages the `ci` namespace.

We'll continually reinforce these relationships as we work through the tutorial. You might like to spend some time exploring the ArgoCD UI and ArgoCD YAMLs before you continue, though it's not necessary, as you'll get lots of practice along the way.
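You can cross-check the count of 9 from the command line. This sketch assumes each of the nine applications carries the `gitops.tier.layer=infra` label that the `1-infra` kustomization patch selects on; `count_infra_apps` is a hypothetical helper. It uses the fully qualified `applications.argoproj.io` resource name to avoid ambiguity with any other `Application` kind installed on the cluster, and `OC` can be pointed at a stub for testing.

```shell
# count_infra_apps: hypothetical helper that counts the ArgoCD applications
# in openshift-gitops labelled gitops.tier.layer=infra.
count_infra_apps() {
  ${OC:-oc} get applications.argoproj.io -n openshift-gitops \
    -l gitops.tier.layer=infra -o name | wc -l | tr -d ' '
}
```

With the nine resources above active, `count_infra_apps` should print `9`.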

Understanding Kustomize

We've seen how the bootstrap-single-cluster, infra, services and applications ArgoCD applications use Kustomize to control the hierarchy of ArgoCD applications that manage resources deployed to the cluster.

Let's now complement these high level applications by spending a little time examining how the ArgoCD application that manages a specific infrastructure resource, the `ci` namespace, works. You may recall that the `ci` namespace is where Tekton pipelines will run; this namespace provides a dedicated environment for continuous integration work. Let's see how this namespace is managed.

The resources managed by the `namespace-ci` ArgoCD application

Let's examine the Kubernetes resources applied to the cluster by the `namespace-ci` ArgoCD application.

In the ArgoCD application list, click on `namespace-ci`:

Tip

You may need to `clear filters` if you've experimented with the ArgoCD web console.

The directed graph shows that the `namespace-ci` ArgoCD app has created 4 Kubernetes resources: the `ci` namespace and three role bindings.

Clearly the `ci` namespace is the primary resource managed by the `namespace-ci` ArgoCD application.

However, equally important to the `ci` namespace are the role bindings associated with it. These role bindings are used to grant specific rights to service accounts associated with the `ci` namespace. For example, Tekton service accounts require permissions to pull images from the image registry via the `system:image-puller` role.
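To check a role binding without the UI, you can list the `roleRef` of each RoleBinding in the namespace. This sketch, assuming a POSIX shell and `oc` access to the `ci` namespace, uses a kubectl-style JSONPath `range` expression. `has_role_binding` is a hypothetical helper; because the names of the three role bindings aren't shown in this topic, it matches on the referenced role name instead.

```shell
# has_role_binding: hypothetical check that some RoleBinding in NAMESPACE
# refers to ROLE (for example system:image-puller in the ci namespace).
has_role_binding() {
  ns=$1
  role=$2
  ${OC:-oc} get rolebindings -n "$ns" \
    -o jsonpath='{range .items[*]}{.roleRef.name}{"\n"}{end}' |
    grep -qxF -e "$role"
}
```

For example, `has_role_binding ci system:image-puller && echo "image-puller is bound"`.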

The `namespace-ci` ArgoCD application

Let's examine the ArgoCD application `namespace-ci` to see how it created the `ci` namespace and three role bindings in the cluster.

Issue the following command to examine its YAML:

```bash
cat 0-bootstrap/single-cluster/1-infra/argocd/namespace-ci.yaml
```

Notice how `apiVersion` and `kind` identify this as an ArgoCD application:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: namespace-ci
  labels:
    gitops.tier.layer: infra
  annotations:
    argocd.argoproj.io/sync-wave: "100"
spec:
  destination:
    namespace: ci
    server: https://kubernetes.default.svc
  project: infra
  source:
    path: namespaces/ci
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

See how this `namespace-ci` ArgoCD application watches the folder `path: namespaces/ci` for Kubernetes resources, which it can deploy to the local cluster in which ArgoCD is running, i.e. `server: https://kubernetes.default.svc`.

But to which GitHub repository does `path: namespaces/ci` refer?

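A Kustomize example in practice

The answer lies in Kustomize!

Recall the `0-bootstrap/single-cluster/kustomization.yaml` snippet:

```yaml
resources:
...
- argocd/namespace-ci.yaml
...
patches:
- target:
    group: argoproj.io
    kind: Application
    labelSelector: "gitops.tier.layer=gitops"
  patch: |-
    - op: add
      path: /spec/source/repoURL
      value: https://github.com/tutorial-org-123/multi-tenancy-gitops.git
    - op: add
      path: /spec/source/targetRevision
      value: master
```

See how a patch like this adds (`op: add`) the `spec/source/repoURL` and `spec/source/targetRevision` elements to the application YAML at deployment time. A patch is only applied to resources that match its `target`, including its `labelSelector`. The snippet above targets applications labelled `gitops.tier.layer=gitops`, whereas `namespace-ci` is labelled `gitops.tier.layer: infra`; the patch that reaches `namespace-ci` is therefore one targeting the infra layer, which adds `spec/source/repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops-infra.git`.

This is a good example of how Kustomize works: a `kustomization.yaml` file updates the `namespace-ci.yaml` file at deployment time, adding the fields required to achieve the desired behavior.

-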

The resultant ArgoCD `namespace-ci` YAML

The YAML for the `namespace-ci` ArgoCD application is created at deployment time by combining two files:

- `.../namespace-ci.yaml` provides the base YAML elements.
- The `kustomization.yaml` file shown above adds the customizations for the `tutorial-org-123` organization.

Because the full ArgoCD YAML for the `namespace-ci` application is generated at deployment time and applied to the cluster, we can use the `oc get` command to see it.

Issue the following command to see the ArgoCD YAML active in the cluster:

```bash
oc get app/namespace-ci -n openshift-gitops -o yaml
```

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  ...
  labels:
    app.kubernetes.io/instance: infra
    gitops.tier.layer: infra
  name: namespace-ci
  namespace: openshift-gitops
  resourceVersion: "7820320"
  uid: 1110bf89-a032-42df-93ba-6c49e2c411e3
spec:
  destination:
    namespace: ci
    server: https://kubernetes.default.svc
  project: infra
  source:
    path: namespaces/ci
    repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops-infra.git
    targetRevision: master
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
...
```

(We've abbreviated the output to show the relevant YAML elements.)

Notice how:

- This is the YAML for an ArgoCD application, as indicated by `apiVersion: argoproj.io/v1alpha1` and `kind: Application`.
- The ArgoCD application name is `namespace-ci`.
- `repoURL: https://github.com/tutorial-org-123/multi-tenancy-gitops-infra.git` and `targetRevision: master` have been added (`op: add`) to `namespace-ci.yaml` by `kustomization.yaml`.
- `repoURL` identifies the repository in which the ArgoCD application looks for the YAML that it will apply to the cluster.
- Note that `repoURL` is different from the repository containing the ArgoCD YAML.
- We can think of the `multi-tenancy-gitops-infra` repository as a library to which the `kustomization.yaml` file provides the parameters.
- `path: namespaces/ci` is the folder within `repoURL` that contains the namespace YAML. (`path:` comes from the base YAML file rather than from `kustomization.yaml`.)
- A `syncPolicy` of `automated` means that any changes to this folder will automatically be applied to the cluster; we do not need to perform a manual `Sync` operation from the ArgoCD UI.
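To make the base-plus-patch mechanism concrete, here is a minimal Python sketch of what the Kustomize patch step does to the `namespace-ci` application. It is not Kustomize itself. It assumes the base YAML has already been parsed into a dictionary, supports only the JSON Patch (RFC 6902) `add` operation on object members, and matches the `labelSelector` by simple `key=value` equality. The infra-layer patch values and the function names `selector_matches`, `apply_add_ops` and `render` are hypothetical.

```python
import copy

# Base Application as declared in namespace-ci.yaml: no repoURL or targetRevision yet.
BASE_APP = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Application",
    "metadata": {"name": "namespace-ci", "labels": {"gitops.tier.layer": "infra"}},
    "spec": {
        "destination": {"namespace": "ci", "server": "https://kubernetes.default.svc"},
        "project": "infra",
        "source": {"path": "namespaces/ci"},
    },
}

# Hypothetical patch shaped like the kustomization.yaml snippet, with the
# selector and URL assumed for the infra layer.
INFRA_PATCH = {
    "target": {"group": "argoproj.io", "kind": "Application",
               "labelSelector": "gitops.tier.layer=infra"},
    "ops": [
        {"op": "add", "path": "/spec/source/repoURL",
         "value": "https://github.com/tutorial-org-123/multi-tenancy-gitops-infra.git"},
        {"op": "add", "path": "/spec/source/targetRevision", "value": "master"},
    ],
}


def selector_matches(resource, selector):
    """Match a single 'key=value' label selector against the resource's labels."""
    key, _, value = selector.partition("=")
    return resource["metadata"].get("labels", {}).get(key) == value


def apply_add_ops(resource, ops):
    """Apply JSON Patch 'add' operations to a deep copy (object members only)."""
    patched = copy.deepcopy(resource)
    for op in ops:
        if op["op"] != "add":
            raise ValueError(f"unsupported op in this sketch: {op['op']}")
        *parents, leaf = op["path"].lstrip("/").split("/")
        node = patched
        for part in parents:
            node = node[part]  # 'add' requires the parent object to exist already
        node[leaf] = op["value"]
    return patched


def render(resource, patch):
    """Return the patched resource if kind, API group and labels match the target."""
    target = patch["target"]
    group = resource["apiVersion"].split("/")[0]
    if (resource["kind"] == target["kind"] and group == target["group"]
            and selector_matches(resource, target["labelSelector"])):
        return apply_add_ops(resource, patch["ops"])
    return resource  # no match: the resource passes through unchanged


rendered = render(BASE_APP, INFRA_PATCH)
print(rendered["spec"]["source"]["repoURL"])
# https://github.com/tutorial-org-123/multi-tenancy-gitops-infra.git
```

Passing a patch whose `labelSelector` is `gitops.tier.layer=gitops` returns `namespace-ci` unchanged, which is why each layer's applications need a patch whose selector matches their label. Real Kustomize also handles JSON Pointer escaping (`~0`, `~1`), list indices and the other RFC 6902 operations, which this sketch omits.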

More on ArgoCD¶

Now that we've seen how Kustomize works, let's explore a few other important aspects of ArgoCD using the ci namespace.

-

The `ci` namespace YAML

The `namespace-ci` ArgoCD application uses the YAML files in the `namespaces/ci` folder within the `multi-tenancy-gitops-infra` repository. You may recall that this repository is a library repository. Let's examine the `ci` namespace YAML in this folder.

Issue the following command:

```bash
cat $GIT_ROOT/multi-tenancy-gitops-infra/namespaces/ci/namespace.yaml
```

It's a very simple YAML:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ci
spec: {}
```

This is a YAML that we would normally apply to the cluster manually or via a script.

Notice that when we use GitOps, we push the `namespace-ci` ArgoCD application to GitHub and it applies the `ci` namespace YAML to the cluster. This is the essence of GitOps: we declare what we want to appear in the cluster using Git, and ArgoCD synchronizes the cluster with this declaration. Think of the ArgoCD `namespace-ci` application as your deployment agent; you say what you want the cluster to look like via a Git configuration, and it creates that configuration in the cluster.

-

Verify the namespace using the `oc` CLI

We've seen the new namespace definition in the GitOps repository and visually in the ArgoCD UI. Let's also verify it via the command line.

Type the following command:

```bash
oc get namespace ci -o yaml --show-managed-fields=true
```

This will list the full details of the `ci` namespace, including the `f:` managed fields:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Namespace","metadata":{"annotations":{},"labels":{"app.kubernetes.io/instance":"namespace-ci"},"name":"ci"},"spec":{}}
    openshift.io/sa.scc.mcs: s0:c27,c9
    openshift.io/sa.scc.supplemental-groups: 1000720000/10000
    openshift.io/sa.scc.uid-range: 1000720000/10000
  creationTimestamp: "2021-08-31T15:27:32Z"
  labels:
    app.kubernetes.io/instance: namespace-ci
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
        f:labels:
          .: {}
          f:app.kubernetes.io/instance: {}
      f:status:
        f:phase: {}
    manager: argocd-application-controller
    operation: Update
    time: "2021-08-31T15:27:32Z"
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          f:openshift.io/sa.scc.mcs: {}
          f:openshift.io/sa.scc.supplemental-groups: {}
          f:openshift.io/sa.scc.uid-range: {}
    manager: cluster-policy-controller
    operation: Update
    time: "2021-08-31T15:27:32Z"
  name: ci
  resourceVersion: "2255607"
  selfLink: /api/v1/namespaces/ci
  uid: fff6b82b-6318-4828-83bb-ade4e8e3c0cf
spec:
  finalizers:
  - kubernetes
status:
  phase: Active
```

Notice how `manager: argocd-application-controller` identifies that this namespace was created by ArgoCD. That's because ArgoCD issued the equivalent of an `oc apply` command to deploy `namespace.yaml` to the cluster.

Let's recall the set of actions that got us to this point. We started by manually deploying the `bootstrap-single-cluster` ArgoCD application, which ultimately resulted in the creation of the `namespace-ci` ArgoCD application, which in turn created the `ci` namespace.

-

Reviewing the cluster changes using GitHub

Once the `bootstrap-single-cluster` ArgoCD application has been applied to the cluster, we never need to interact with the cluster directly to change its state. After this first manual step, the cluster state is determined by a set of ArgoCD applications which look after application, service and infrastructure resources. These ArgoCD applications create and manage the underlying Kubernetes resources using the GitOps repository as the source of truth. By interacting with Git using `git commit`, `git push` and `git merge` commands, we say what we want the cluster state to be, and ArgoCD makes it happen. Let's have a look at our cluster's history of updates using GitHub.

Issue the following command to determine the URL that shows the GitHub updates:

```bash
echo https://github.com/$GIT_ORG/multi-tenancy-gitops/commits/master
```

Copy the resultant URL to your browser.

You can see the list of changes made to the cluster; our use of Git and GitOps gives us a full audit trail.

-

Examine the `ci` rolebinding YAML

Now that we've seen how the namespace was created, let's see how the three rolebindings were created by the `namespace-ci` ArgoCD application.

In the same folder as the `ci` namespace YAML, there is a `rolebinding.yaml` file. This file is also applied to the cluster by the `namespace-ci` ArgoCD application, which continuously watches this folder.

Examine this file with the following command:

```bash
cat $GIT_ROOT/multi-tenancy-gitops-infra/namespaces/ci/rolebinding.yaml
```

This YAML is slightly more complex than the namespace YAML:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: system:image-puller-dev
  namespace: ci
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:image-puller
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:dev
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: system:image-puller-staging
  namespace: ci
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:image-puller
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:staging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: system:image-puller-prod
  namespace: ci
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:image-puller
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:prod
---
```

If you look carefully, you'll see that there are indeed three rolebindings defined in this YAML. This explains why we saw four resources created by the `namespace-ci` ArgoCD application in the ArgoCD UI: one namespace and three rolebindings.

Each of these rolebindings binds the same `system:image-puller` ClusterRole, within the `ci` namespace, to the service accounts of a different namespace (`dev`, `staging` or `prod`). This allows workloads deployed in each environment to pull images from the `ci` namespace, where components such as Tekton pipelines run. We'll see later how rolebindings like these control the operations that service accounts can perform, creating a well governed cluster.

Again, notice the pattern: a single ArgoCD application manages one or more Kubernetes resources in the cluster, using one or more YAML files in which those resources are defined. Throughout, we're creating a system that is responsive and also well governed.
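The `managedFields` check from the namespace verification step can also be done programmatically, for example to confirm which controller owns a field across many namespaces. Here is a minimal sketch that assumes the namespace has been fetched as JSON (for instance with `oc get namespace ci -o json --show-managed-fields=true`). The sample data is an abbreviated copy of the output shown earlier, and the helper names `owned_fields` and `managers_of` are hypothetical. The sketch only strips the `f:` prefix and does not interpret the `k:`/`v:` keys Kubernetes uses for list items.

```python
import json

# Abbreviated copy of the namespace output shown earlier, as JSON
# (only metadata.managedFields is kept).
NAMESPACE = json.loads("""
{"apiVersion": "v1", "kind": "Namespace",
 "metadata": {"name": "ci", "managedFields": [
   {"manager": "argocd-application-controller", "operation": "Update",
    "fieldsV1": {"f:metadata": {
        "f:annotations": {".": {}, "f:kubectl.kubernetes.io/last-applied-configuration": {}},
        "f:labels": {".": {}, "f:app.kubernetes.io/instance": {}}},
      "f:status": {"f:phase": {}}}},
   {"manager": "cluster-policy-controller", "operation": "Update",
    "fieldsV1": {"f:metadata": {"f:annotations": {
        "f:openshift.io/sa.scc.mcs": {},
        "f:openshift.io/sa.scc.supplemental-groups": {},
        "f:openshift.io/sa.scc.uid-range": {}}}}}]}}
""")


def owned_fields(fields, prefix=()):
    """Yield a path tuple for every leaf field in a FieldsV1 tree."""
    for key, child in fields.items():
        if key == ".":
            continue  # "." denotes the map itself rather than a named field
        path = prefix + (key[2:] if key.startswith("f:") else key,)
        if child:
            yield from owned_fields(child, path)
        else:
            yield path


def managers_of(obj, path):
    """Return the field managers whose managedFields entry owns the given path."""
    return [
        entry["manager"]
        for entry in obj["metadata"].get("managedFields", [])
        if tuple(path) in set(owned_fields(entry.get("fieldsV1", {})))
    ]


print(managers_of(NAMESPACE, ("metadata", "labels", "app.kubernetes.io/instance")))
# ['argocd-application-controller']
print(managers_of(NAMESPACE, ("metadata", "annotations", "openshift.io/sa.scc.mcs")))
# ['cluster-policy-controller']
```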
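The three RoleBindings in `rolebinding.yaml` differ only in their name suffix and subject group. That becomes obvious when they are generated from a single template. Here is a minimal sketch, assuming you wanted to produce these manifests programmatically. The function `image_puller_binding` is hypothetical and not part of the tutorial repositories. In a GitOps workflow the generated output would still be committed to the repository for ArgoCD to apply, rather than applied to the cluster directly.

```python
CLUSTER_ROLE = "system:image-puller"
SOURCE_NAMESPACE = "ci"  # namespace whose images are being shared


def image_puller_binding(env):
    """Build a RoleBinding in 'ci' that grants image-puller to every service account in env."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{CLUSTER_ROLE}-{env}", "namespace": SOURCE_NAMESPACE},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": CLUSTER_ROLE},
        "subjects": [{"apiGroup": "rbac.authorization.k8s.io", "kind": "Group",
                      "name": f"system:serviceaccounts:{env}"}],
    }


bindings = [image_puller_binding(env) for env in ("dev", "staging", "prod")]
for binding in bindings:
    print(binding["metadata"]["name"], "->", binding["subjects"][0]["name"])
# system:image-puller-dev -> system:serviceaccounts:dev
# system:image-puller-staging -> system:serviceaccounts:staging
# system:image-puller-prod -> system:serviceaccounts:prod
```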

Managing change¶

We end this topic by exploring how ArgoCD provides some advanced resource management features. We'll focus on two aspects of how to manage resources:

-

Dynamic: We'll see how ArgoCD allows us to easily and quickly update any resource in the cluster. We're focussing on infrastructure components like namespaces, but the same principles will apply to applications, databases, workflow engines and messaging systems -- any resource within the cluster.

-

Governed: While we want to be agile, we also want a well governed system. As we've seen, GitOps allows us to define the state of the cluster from a git repository -- but can we also ensure that the cluster stays that way? We'll see how ArgoCD helps with configuration drift -- ensuring that a cluster only changes when properly approved.

Dynamic updates¶

-

Locate your GitOps repository

If necessary, change to your GitOps repository, which is located within the directory stored in the `$GIT_ROOT` environment variable.

Issue the following commands to change to your GitOps repository:

```bash
cd $GIT_ROOT
cd multi-tenancy-gitops
```

-

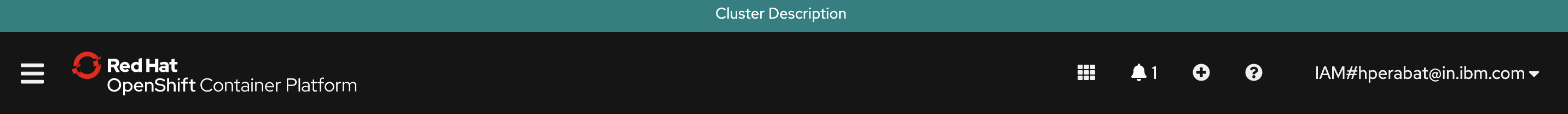

Customize the web console banner

Examine the banner in the OpenShift web console:

We're going to use GitOps to modify this banner dynamically.

-

The banner YAML

The banner properties are defined by the YAML in `0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml`. This YAML is currently being used by the `cntk-consolenotification` ArgoCD application that was deployed earlier.

We can examine the YAML with the following command:

```bash
cat 0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml
```

This shows that the banner properties are supplied as Helm values for the `ConsoleNotification` custom resource that this ArgoCD application creates:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: cntk-consolenotification
  labels:
    gitops.tier.layer: infra
  annotations:
    argocd.argoproj.io/sync-wave: "100"
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
  destination:
    namespace: openshift-gitops
    server: https://kubernetes.default.svc
  project: infra
  source:
    path: consolenotification
    helm:
      values: |
        ocp-console-notification:
          ## The name of the ConsoleNotification resource in the cluster
          name: "banner-env"
          ## The background color that should be used for the banner
          backgroundColor: teal
          ## The color of the text that will appear in the banner
          color: "'#fff'"
          ## The location of the banner. Options: BannerTop, BannerBottom, BannerTopBottom
          location: BannerTop
          ## The text that should be displayed in the banner. This value is required for the banner to be created
          text: "Cluster Description"
```

See how the banner at the top of the screen:

- contains the text `Cluster Description`
- is located at the top of the screen
- has the color `teal`

-

Modify the YAML for this banner

Let's now change this YAML.

In your editor, modify this YAML and change the fields below as follows:

```yaml
ocp-console-notification:
  ## The name of the ConsoleNotification resource in the cluster
  name: "banner-env"
  ## The background color that should be used for the banner
  backgroundColor: red
  ## The color of the text that will appear in the banner
  color: "'#fff'"
  ## The location of the banner. Options: BannerTop, BannerBottom, BannerTopBottom
  location: BannerTop
  ## The text that should be displayed in the banner. This value is required for the banner to be created
  text: "Production Reference Guide"
```

Our intention is to change the banner's `backgroundColor` to `red` and its `text` to `Production Reference Guide`. If you look at the diff:

```bash
git diff
```

you should see the following:

```diff
diff --git a/0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml b/0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml
index 30adf1a..596e821 100644
--- a/0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml
+++ b/0-bootstrap/single-cluster/1-infra/argocd/consolenotification.yaml
@@ -26,10 +26,10 @@ spec:
           name: "banner-env"
           ## The background color that should be used for the banner
-          backgroundColor: teal
+          backgroundColor: red
           ## The color of the text that will appear in the banner
           color: "'#fff'"
           ## The location of the banner. Options: BannerTop, BannerBottom, BannerTopBottom
           location: BannerTop
           ## The text that should be displayed in the banner. This value is required for the banner to be created
-          text: "Cluster Description"
+          text: "Production Reference Guide"
```

-

Make the web console YAML change active

Let's make these changes visible to the `cntk-consolenotification` ArgoCD application via GitHub.

Add all changes in the current folder to a Git index, commit them, and push them to GitHub:

```bash
git add .
git commit -s -m "Modify console banner"
git push origin $GIT_BRANCH
```

You'll see the changes being pushed to GitHub:

```
Enumerating objects: 13, done.
Counting objects: 100% (13/13), done.
Delta compression using up to 8 threads
Compressing objects: 100% (7/7), done.
Writing objects: 100% (7/7), 670 bytes | 670.00 KiB/s, done.
Total 7 (delta 5), reused 0 (delta 0)
remote: Resolving deltas: 100% (5/5), completed with 5 local objects.
To https://github.com/tutorial-org-123/multi-tenancy-gitops.git
   a1e8292..b49dff5  master -> master
```

Let's see what effect they have on the web console.

-

A dynamic change to the web console

You can either wait for ArgoCD to automatically sync the `cntk-consolenotification` application, or manually `Refresh` and `Sync` the `infra` application yourself.

Returning to the OpenShift web console, you'll notice that the banner has changed.

Notice the dynamic nature of these changes: we updated the console YAML, pushed our changes to our GitOps repository, and everything else happened automatically.

As a result, our OpenShift console has a new banner color and text. This is a simple yet effective demonstration of how quickly we can roll out very visible changes.
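The manual edit and `git diff` check above can also be scripted, which helps when the same change has to be made in several places. Here is a minimal sketch using only the Python standard library. It assumes the simple one-`key: value`-per-line layout of the Helm values shown above. The helper `set_value` is hypothetical, and a real tool should parse the YAML instead of relying on regular expressions. You would still commit and push the result for ArgoCD to pick it up.

```python
import difflib
import re

# Excerpt of the Helm values in consolenotification.yaml (comments omitted).
ORIGINAL = """\
ocp-console-notification:
  name: "banner-env"
  backgroundColor: teal
  color: "'#fff'"
  location: BannerTop
  text: "Cluster Description"
"""


def set_value(text, key, value):
    """Replace the value on the first 'key:' line, preserving its indentation."""
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}:[ \t]*).*$", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: m.group(1) + value, text, count=1)
    if count == 0:
        raise KeyError(key)
    return new_text


updated = set_value(ORIGINAL, "backgroundColor", "red")
updated = set_value(updated, "text", '"Production Reference Guide"')

# Render the change as a unified diff, the same format that `git diff` prints.
diff_text = "".join(difflib.unified_diff(
    ORIGINAL.splitlines(keepends=True),
    updated.splitlines(keepends=True),
    fromfile="a/consolenotification.yaml",
    tofile="b/consolenotification.yaml",
))
print(diff_text)
```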

Configuration drift¶

-

Governing changes to the `dev` namespace

Let's now look at how ArgoCD monitors Kubernetes resources for configuration drift, and what happens if it detects an unexpected change to a monitored resource.

Don't worry about the following command; it might seem drastic and even reckless, but as you'll see, everything will be OK.

Let's delete the `dev` namespace from the cluster:

```bash
oc get namespace dev
oc delete namespace dev
```

See how the active namespace:

```
NAME   STATUS   AGE
dev    Active   2d18h
```

is deleted:

```
namespace "dev" deleted
```

We can see that the `dev` namespace has been manually deleted from the cluster.

-

GitOps repository as a source of truth

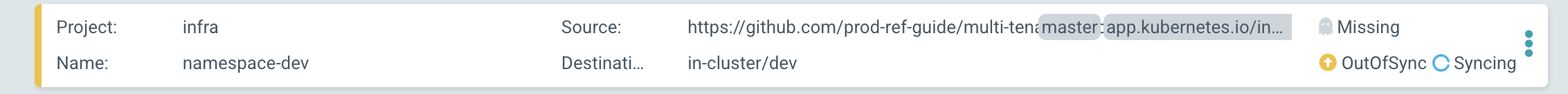

If you switch back to the ArgoCD UI, you may see that ArgoCD has detected a configuration drift:

- a resource is

Missing(thedevnamespace) namespace-devtherefore isOutOfSyncnamespace-devis thereforeSyncingwith the GitOps repository

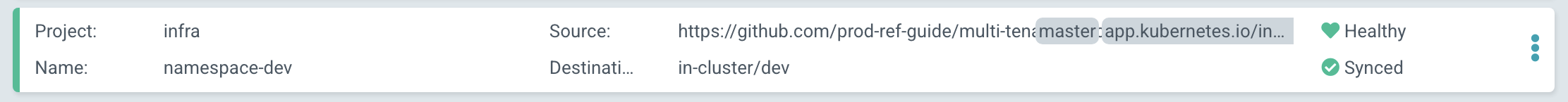

After a while we'll see that

namespace-devisHealthyandSynced:

ArgoCD has detected a configuration drift, and resynched with GitOps repository, re-applying the

devnamespace to the cluster.Note

You may not seethe first screenshot if ArgoCD detects and corrects the missing

devnamespace before you get a chance to switch to the ArgoCD UI. Don't worry, you can try thedeleteoperation again! - a resource is

-

The restored `dev` namespace

Issue the following command to determine the status of the `dev` namespace:

```bash
oc get namespace dev
```

which confirms that the `dev` namespace has been reinstated:

```
NAME   STATUS   AGE
dev    Active   115s
```

Note that this is a different instance of the `dev` namespace, as indicated by its `AGE` value.

Notice the well governed nature of these changes: GitOps is our source of truth about the resources deployed to the cluster. ArgoCD restores any resources that suffer from configuration drift to their GitOps-defined configuration. It doesn't matter whether the change was accidental or not; ArgoCD treats any change to a managed resource (e.g. the `dev` namespace) as invalid unless it's synced with its source of truth, your GitOps repository.

We'll see more about well governed changes when we look at Tekton pipelines that build and test any changes before they are deployed.
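The behaviour observed in this experiment, drift detection followed by self-healing, can be pictured as a reconcile step that compares the desired state in Git with the live state. The following is a deliberately simplified model, not ArgoCD's implementation. It compares resources only by kind and name, whereas real ArgoCD diffs full manifests. The `Ref` type and the `sync_status` and `self_heal` functions are hypothetical. The `prune` flag mirrors the `prune: true` setting seen in the applications' `syncPolicy`.

```python
import itertools
from dataclasses import dataclass

_next_uid = itertools.count(1)  # stand-in for the API server assigning fresh UIDs


@dataclass(frozen=True)
class Ref:
    """Identifies a resource by kind and name (a hypothetical simplification)."""
    kind: str
    name: str


def sync_status(desired, live):
    """'Synced' when the live resources tracked by the app match Git exactly."""
    return "Synced" if set(desired) == set(live) else "OutOfSync"


def self_heal(desired, live, prune=True):
    """One reconcile pass: recreate missing resources and, if prune, delete extras."""
    missing = [ref for ref in desired if ref not in live]
    for ref in missing:
        live[ref] = next(_next_uid)  # a recreated object is a new instance
    if prune:
        for ref in [r for r in live if r not in desired]:
            del live[ref]
    return missing


desired = {Ref("Namespace", "dev")}                # declared in the GitOps repository
live = {Ref("Namespace", "dev"): next(_next_uid)}  # tracked live resources -> uid
first_uid = live[Ref("Namespace", "dev")]

del live[Ref("Namespace", "dev")]                  # like `oc delete namespace dev`
print(sync_status(desired, live))                  # OutOfSync
self_heal(desired, live)
print(sync_status(desired, live))                  # Synced
print(live[Ref("Namespace", "dev")] != first_uid)  # True: a new instance, hence the new AGE
```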

Success

"Congratulations!" You've used ArgoCD and the GitOps repository to set up infrastructure resources such as the ci, tools and dev namespaces. You've seen how ArgoCD applications watch their respective GitOps namespace folders for resources they should apply to the cluster. You've seen how you can dynamically change deployed resources by updating the resource definition in the GitOps repository. Finally, you've experienced how ArgoCD keeps the cluster synchronized with the GitOps repository as a source of truth; any unexpected configuration drift will be corrected without intervention.

In the next section of this tutorial, we're going to deploy services alongside infrastructure resources created in this topic.